Yet other classifiers


```python
# Install the necessary dependencies
import sys

!{sys.executable} -m pip install --quiet pandas scikit-learn numpy matplotlib jupyterlab_myst ipython
```

12.3. Yet other classifiers

In this second classification section, you will explore more ways to classify numeric data. You will also learn about the ramifications of choosing one classifier over another.

12.3.1. Preparation

We start from the cleaned dataset prepared in build-classification-model.ipynb, divided into x (feature) and y (label) dataframes, ready for the model building process. The code below reloads the cleaned data from a CSV file.

12.3.2. A classification map#

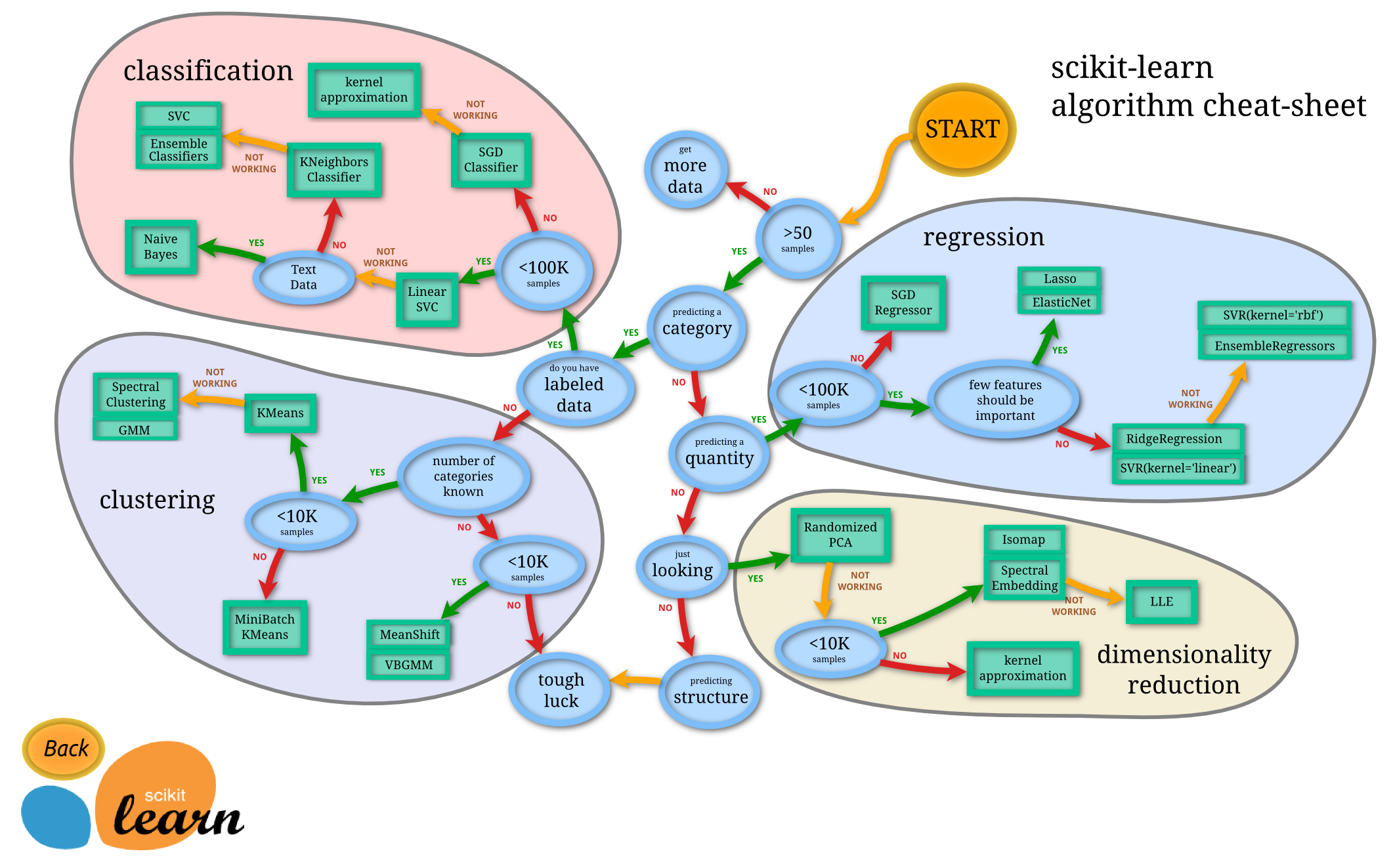

Previously, you learned about the various options you have when classifying data using Microsoft’s cheat sheet. Scikit-learn offers a similar, but more granular cheat sheet that can further help narrow down your estimators (another term for classifiers):

12.3.2.1. The plan

This map is very helpful once you have a clear grasp of your data, as you can ‘walk’ along its paths to a decision:

- We have >50 samples
- We want to predict a category
- We have labeled data
- We have fewer than 100K samples
- ✨ We can choose a Linear SVC
- If that doesn’t work, since we have numeric data, we can try a ✨ KNeighbors Classifier
- If that doesn’t work, try ✨ SVC and ✨ Ensemble Classifiers

This is a very helpful trail to follow.

12.3.3. Exercise - split the data

Following this path, we should start by importing some libraries to use.

1. Import the needed libraries and load the cleaned data:

```python
from sklearn.neighbors import KNeighborsClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.svm import SVC
from sklearn.ensemble import RandomForestClassifier, AdaBoostClassifier
from sklearn.model_selection import train_test_split, cross_val_score
from sklearn.metrics import accuracy_score, precision_score, confusion_matrix, classification_report, precision_recall_curve
import numpy as np
import pandas as pd

# Load the cleaned dataset
cuisines_df = pd.read_csv("https://static-1300131294.cos.ap-shanghai.myqcloud.com/data/classification/cleaned_cuisines.csv")

# Features: every column except the leftover index column and the label
cuisines_feature_df = cuisines_df.drop(['Unnamed: 0', 'cuisine'], axis=1)
# Labels: the cuisine name
cuisines_label_df = cuisines_df['cuisine']
```

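2. Split your training and test data:

```python
# Hold out 30% of the rows as a test set. No random_state is set,
# so the split (and the scores below) will vary slightly between runs.
X_train, X_test, y_train, y_test = train_test_split(cuisines_feature_df, cuisines_label_df, test_size=0.3)
```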
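Because the split above is random, the exact scores you see will differ a little from the ones printed in this section. If you want a split that is reproducible and keeps each class’s share the same in both halves, train_test_split also accepts random_state and stratify arguments. A minimal sketch on a small made-up label column (the toy data and the demo_ variable names are assumptions for illustration, chosen so they do not overwrite the lesson’s variables):

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Toy data (hypothetical): 60 rows labelled 'a' and 40 labelled 'b'
demo_X = pd.DataFrame({"feature": range(100)})
demo_y = pd.Series(["a"] * 60 + ["b"] * 40)

# stratify keeps the 60/40 class ratio in both splits;
# random_state makes the shuffle repeatable
demo_X_train, demo_X_test, demo_y_train, demo_y_test = train_test_split(
    demo_X, demo_y, test_size=0.3, stratify=demo_y, random_state=0
)
print(len(demo_X_test))                      # 30
print(demo_y_test.value_counts().to_dict())  # 18 'a' rows and 12 'b' rows

# The same call with the same random_state selects exactly the same test rows
_, demo_X_test_again, _, _ = train_test_split(
    demo_X, demo_y, test_size=0.3, stratify=demo_y, random_state=0
)
print(demo_X_test.index.equals(demo_X_test_again.index))  # True
```

Fixing random_state is mainly useful when comparing classifiers, since every model is then scored on identical test rows.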

12.3.4. Linear SVC classifier

Support Vector Classification (SVC) is a member of the Support Vector Machines family of ML techniques (learn more about these below). In this method, you choose a ‘kernel’ that determines the shape of the boundary used to separate the classes. The ‘C’ parameter controls regularization: it trades off a wide margin against misclassified training points, and smaller values of C mean stronger regularization. The kernel can be one of several; here we set it to ‘linear’ so that we get a linear SVC. Probability defaults to False; here we set it to True to gather probability estimates. We set the random state to 0 so that the random shuffling SVC uses internally when computing those probability estimates is reproducible.
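To see these parameters in action outside the cuisine data, here is a minimal sketch on a synthetic three-class dataset generated with make_classification (the dataset and its settings are assumptions for illustration only). It uses the same SVC settings as the exercise below and checks that, with probability=True, predict_proba returns one probability per class for each sample, with each row summing to 1:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.svm import SVC

# Synthetic 3-class data (illustrative only, not the cuisine dataset)
X_demo, y_demo = make_classification(
    n_samples=300, n_features=5, n_informative=3, n_redundant=0,
    n_classes=3, random_state=0
)

# Linear kernel, C=10, probability estimates on, reproducible internal shuffling
svc = SVC(kernel="linear", C=10, probability=True, random_state=0)
svc.fit(X_demo, y_demo)

proba = svc.predict_proba(X_demo[:5])
print(proba.shape)        # (5, 3): one column per class
print(proba.sum(axis=1))  # each row sums to 1
print(svc.n_support_)     # number of support vectors found for each class
```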

12.3.4.1. Exercise - apply a linear SVC

Start by creating a dictionary of classifiers, keyed by name. You will add to this dictionary progressively as we test.

1. Start with a Linear SVC:

```python
C = 10

# Create different classifiers.
classifiers = {
    'Linear SVC': SVC(kernel='linear', C=C, probability=True, random_state=0)
}
```

2. Train each model in the dictionary (so far, just the Linear SVC), evaluate it on the test set, and print out a report:

```python
def classify():
    for name, classifier in classifiers.items():
        # Fit on the training split (np.ravel flattens the labels to 1-D)
        classifier.fit(X_train, np.ravel(y_train))
        # Predict and score on the held-out test split
        y_pred = classifier.predict(X_test)
        accuracy = accuracy_score(y_test, y_pred)
        print("Accuracy (train) for %s: %0.1f%% " % (name, accuracy * 100))
        print(classification_report(y_test, y_pred))

classify()
```

```
Accuracy (train) for Linear SVC: 80.2%
              precision    recall  f1-score   support

     chinese       0.66      0.76      0.71       216
      indian       0.90      0.90      0.90       249
    japanese       0.81      0.76      0.78       243
      korean       0.84      0.75      0.79       245
        thai       0.81      0.84      0.82       246

    accuracy                           0.80      1199
   macro avg       0.80      0.80      0.80      1199
weighted avg       0.81      0.80      0.80      1199
```

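The result is pretty good. Note that although the printed message says “(train)”, the accuracy is computed on the held-out test set (X_test and y_test).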
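Each row of the report comes from counting correct and incorrect predictions for one class: precision is the share of samples predicted as that class that really belong to it, recall is the share of that class’s samples that were found, F1 is their harmonic mean, and support is the number of true samples of the class. The macro avg row is the plain mean of the per-class values, and the weighted avg row weights each class by its support. A minimal sketch that rebuilds one class’s numbers by hand, using small made-up label lists rather than the cuisine predictions, and compares them with scikit-learn:

```python
from sklearn.metrics import f1_score, precision_score, recall_score

# Made-up true and predicted labels (illustration only)
y_true = ["thai", "thai", "thai", "indian", "indian", "korean"]
y_hat = ["thai", "thai", "indian", "indian", "thai", "korean"]
label = "thai"

pairs = list(zip(y_true, y_hat))
tp = sum(t == label and p == label for t, p in pairs)  # predicted thai, really thai
fp = sum(t != label and p == label for t, p in pairs)  # predicted thai, really another cuisine
fn = sum(t == label and p != label for t, p in pairs)  # really thai, predicted another cuisine
support = tp + fn                                      # number of samples that are really thai

precision = tp / (tp + fp)
recall = tp / (tp + fn)
f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of precision and recall
print(f"manual:  precision={precision:.2f} recall={recall:.2f} f1={f1:.2f} support={support}")

# Per-class values computed by scikit-learn for the same label
sk_precision = precision_score(y_true, y_hat, labels=[label], average=None)[0]
sk_recall = recall_score(y_true, y_hat, labels=[label], average=None)[0]
sk_f1 = f1_score(y_true, y_hat, labels=[label], average=None)[0]
print(f"sklearn: precision={sk_precision:.2f} recall={sk_recall:.2f} f1={sk_f1:.2f}")
```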

12.3.5. K-Neighbors classifier

K-Neighbors is part of the “neighbors” family of ML methods, which can be used for both supervised and unsupervised learning. To classify a new sample, this method finds the k training samples closest to it (its nearest neighbors) and predicts the label that is most common among them; k is a number you choose in advance.
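The voting idea fits in a few lines. Below is a minimal sketch of k-nearest-neighbors classification with Euclidean distance and an unweighted majority vote, run on six made-up 2-D points; the helper knn_predict and the data are hypothetical. Its predictions agree with scikit-learn’s KNeighborsClassifier, whose defaults are also Euclidean distance and uniform weights:

```python
from collections import Counter

import numpy as np
from sklearn.neighbors import KNeighborsClassifier


def knn_predict(train_points, train_labels, query_points, k=3):
    """Hypothetical helper: label each query by majority vote of its k nearest training points."""
    predictions = []
    for q in query_points:
        # Euclidean distance from the query to every training point
        distances = np.linalg.norm(train_points - q, axis=1)
        # Indices of the k closest training points
        nearest = np.argsort(distances)[:k]
        # Most common label among those neighbors
        votes = Counter(train_labels[nearest])
        predictions.append(votes.most_common(1)[0][0])
    return np.array(predictions)


# Two well-separated groups of made-up 2-D points
pts = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0],
                [5.0, 5.0], [5.0, 6.0], [6.0, 5.0]])
pts_labels = np.array(["a", "a", "a", "b", "b", "b"])
queries = np.array([[0.5, 0.5], [5.5, 5.5], [1.0, 1.0]])

ours = knn_predict(pts, pts_labels, queries, k=3)
theirs = KNeighborsClassifier(n_neighbors=3).fit(pts, pts_labels).predict(queries)
print(ours)    # ['a' 'b' 'a']
print(theirs)  # ['a' 'b' 'a']
```

Note that nothing is learned up front: all the work happens at prediction time, which is why KNN can become slow on large datasets.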

12.3.5.1. Exercise - apply the K-Neighbors classifier

The previous classifier was good and worked well with the data, but maybe we can get better accuracy. Try a K-Neighbors classifier.

1. Add a K-Neighbors classifier to the classifiers dictionary, then run classify() again (it retrains and reports every classifier in the dictionary):

```python
# C (10) is reused here as n_neighbors, the number of neighbors k
classifiers['KNN classifier'] = KNeighborsClassifier(n_neighbors=C)

classify()
```

```
Accuracy (train) for Linear SVC: 80.2%
              precision    recall  f1-score   support

     chinese       0.66      0.76      0.71       216
      indian       0.90      0.90      0.90       249
    japanese       0.81      0.76      0.78       243
      korean       0.84      0.75      0.79       245
        thai       0.81      0.84      0.82       246

    accuracy                           0.80      1199
   macro avg       0.80      0.80      0.80      1199
weighted avg       0.81      0.80      0.80      1199

Accuracy (train) for KNN classifier: 76.1%
              precision    recall  f1-score   support

     chinese       0.68      0.75      0.71       216
      indian       0.84      0.83      0.84       249
    japanese       0.69      0.85      0.76       243
      korean       0.93      0.60      0.73       245
        thai       0.74      0.78      0.76       246

    accuracy                           0.76      1199
   macro avg       0.78      0.76      0.76      1199
weighted avg       0.78      0.76      0.76      1199
```

The result is a little worse: 76.1% accuracy, compared with 80.2% for the Linear SVC.

See also

Learn about K-Neighbors

12.3.6. Support Vector Classifier

Support-Vector classifiers are part of the Support-Vector Machine family of ML methods, which are used for classification and regression tasks. SVMs “map training examples to points in space” and choose the boundary that maximizes the distance (the margin) between two categories. New data is mapped into the same space, and its category is predicted from the side of the boundary on which it falls.
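The SVC in the next exercise keeps scikit-learn’s default RBF kernel instead of the linear kernel used earlier, and the kernel is what lets an SVM draw curved boundaries. As a sketch, here are both kernels on synthetic concentric-circle data from make_circles (the dataset is an assumption, chosen only because no straight line can separate it). Accuracy is measured on the training points to keep the example short:

```python
from sklearn.datasets import make_circles
from sklearn.svm import SVC

# Inner circle = one class, outer ring = the other (synthetic data)
X_circ, y_circ = make_circles(n_samples=200, factor=0.3, noise=0.05, random_state=0)

# Fit each kernel and score it on the same points it was trained on
linear_acc = SVC(kernel="linear").fit(X_circ, y_circ).score(X_circ, y_circ)
rbf_acc = SVC(kernel="rbf").fit(X_circ, y_circ).score(X_circ, y_circ)

# A straight line cannot enclose the inner circle; the RBF boundary can
print(f"linear kernel: {linear_acc:.2f}")
print(f"rbf kernel:    {rbf_acc:.2f}")
```

On the cuisine data the gap between the two kernels is much smaller, as the results below show, because those classes are already close to linearly separable.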

12.3.6.1. Exercise - apply a Support Vector Classifier

Let’s try for a little better accuracy with a Support Vector Classifier.

1. Add an SVC with scikit-learn’s default settings to the classifiers dictionary, then run classify() again:

```python
# SVC() defaults to kernel='rbf' and C=1.0
classifiers['SVC'] = SVC()

classify()
```

```
Accuracy (train) for Linear SVC: 80.2%
              precision    recall  f1-score   support

     chinese       0.66      0.76      0.71       216
      indian       0.90      0.90      0.90       249
    japanese       0.81      0.76      0.78       243
      korean       0.84      0.75      0.79       245
        thai       0.81      0.84      0.82       246

    accuracy                           0.80      1199
   macro avg       0.80      0.80      0.80      1199
weighted avg       0.81      0.80      0.80      1199

Accuracy (train) for KNN classifier: 76.1%
              precision    recall  f1-score   support

     chinese       0.68      0.75      0.71       216
      indian       0.84      0.83      0.84       249
    japanese       0.69      0.85      0.76       243
      korean       0.93      0.60      0.73       245
        thai       0.74      0.78      0.76       246

    accuracy                           0.76      1199
   macro avg       0.78      0.76      0.76      1199
weighted avg       0.78      0.76      0.76      1199

Accuracy (train) for SVC: 82.1%
              precision    recall  f1-score   support

     chinese       0.70      0.76      0.73       216
      indian       0.91      0.90      0.91       249
    japanese       0.82      0.79      0.80       243
      korean       0.87      0.79      0.83       245
        thai       0.81      0.85      0.83       246

    accuracy                           0.82      1199
   macro avg       0.82      0.82      0.82      1199
weighted avg       0.82      0.82      0.82      1199
```

The result is quite good! At 82.1%, SVC is the most accurate classifier so far.

See also

Learn about Support-Vectors

12.3.7. Ensemble Classifiers

Let’s follow the path to the very end, even though the previous test was quite good, and try some Ensemble Classifiers, specifically Random Forest and AdaBoost:

```python
# Neither model sets random_state, so their scores can vary slightly between runs
classifiers['RFST'] = RandomForestClassifier(n_estimators=100)
classifiers['ADA'] = AdaBoostClassifier(n_estimators=100)

classify()
```

```
Accuracy (train) for Linear SVC: 80.2%
              precision    recall  f1-score   support

     chinese       0.66      0.76      0.71       216
      indian       0.90      0.90      0.90       249
    japanese       0.81      0.76      0.78       243
      korean       0.84      0.75      0.79       245
        thai       0.81      0.84      0.82       246

    accuracy                           0.80      1199
   macro avg       0.80      0.80      0.80      1199
weighted avg       0.81      0.80      0.80      1199

Accuracy (train) for KNN classifier: 76.1%
              precision    recall  f1-score   support

     chinese       0.68      0.75      0.71       216
      indian       0.84      0.83      0.84       249
    japanese       0.69      0.85      0.76       243
      korean       0.93      0.60      0.73       245
        thai       0.74      0.78      0.76       246

    accuracy                           0.76      1199
   macro avg       0.78      0.76      0.76      1199
weighted avg       0.78      0.76      0.76      1199

Accuracy (train) for SVC: 82.1%
              precision    recall  f1-score   support

     chinese       0.70      0.76      0.73       216
      indian       0.91      0.90      0.91       249
    japanese       0.82      0.79      0.80       243
      korean       0.87      0.79      0.83       245
        thai       0.81      0.85      0.83       246

    accuracy                           0.82      1199
   macro avg       0.82      0.82      0.82      1199
weighted avg       0.82      0.82      0.82      1199

Accuracy (train) for RFST: 84.7%
              precision    recall  f1-score   support

     chinese       0.78      0.82      0.80       216
      indian       0.92      0.92      0.92       249
    japanese       0.86      0.80      0.83       243
      korean       0.87      0.81      0.84       245
        thai       0.81      0.88      0.84       246

    accuracy                           0.85      1199
   macro avg       0.85      0.85      0.85      1199
weighted avg       0.85      0.85      0.85      1199

Accuracy (train) for ADA: 67.3%
              precision    recall  f1-score   support

     chinese       0.55      0.26      0.36       216
      indian       0.85      0.82      0.83       249
    japanese       0.68      0.66      0.67       243
      korean       0.55      0.83      0.66       245
        thai       0.72      0.75      0.73       246

    accuracy                           0.67      1199
   macro avg       0.67      0.66      0.65      1199
weighted avg       0.68      0.67      0.66      1199
```

Random Forest gives the best result so far (84.7%), while AdaBoost, at 67.3%, is the weakest of all the classifiers tried here.
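Because the train/test split is random and the forest and AdaBoost models are themselves randomized, a single test-set accuracy can shift between runs, so small gaps between classifiers deserve some caution. cross_val_score, already imported in the first exercise step, gives a steadier comparison by averaging over several folds. A minimal sketch on synthetic data from make_classification (an assumption to keep it fast and self-contained; in the notebook you could pass cuisines_feature_df and cuisines_label_df instead, at the cost of a longer run):

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier

# Synthetic stand-in for the feature and label dataframes (illustrative only)
X_cv, y_cv = make_classification(n_samples=500, n_features=10, n_informative=5, random_state=0)

models = {
    "KNN": KNeighborsClassifier(n_neighbors=10),
    "RFST": RandomForestClassifier(n_estimators=100, random_state=0),
}

cv_results = {}
for name, model in models.items():
    # 5-fold cross-validation: five fits, each scored on a different held-out fifth
    scores = cross_val_score(model, X_cv, y_cv, cv=5, scoring="accuracy")
    cv_results[name] = scores
    print(f"{name}: mean {scores.mean():.3f}, std {scores.std():.3f}")
```

The standard deviation across folds gives a rough sense of how much a single split’s score can move.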

See also

Learn about Ensemble Classifiers

This method of Machine Learning “combines the predictions of several base estimators” to improve the model’s quality. In our example, we used Random Forest and AdaBoost.

Random Forest, an averaging method, builds a ‘forest’ of ‘decision trees’ infused with randomness to avoid overfitting. The n_estimators parameter sets the number of trees.
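To make “decision trees infused with randomness” concrete, here is a simplified sketch of the two usual sources of randomness: each tree is trained on a bootstrap sample (rows drawn with replacement), and each split considers only a random subset of the features (max_features="sqrt"). The helpers fit_forest and predict_forest are hypothetical, the data is synthetic, and the trees are combined by a hard majority vote, whereas scikit-learn’s RandomForestClassifier averages the trees’ predicted class probabilities instead:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier


def fit_forest(X, y, n_estimators=25, seed=0):
    """Hypothetical helper: train trees on bootstrap samples with random feature subsets."""
    rng = np.random.default_rng(seed)
    trees = []
    for i in range(n_estimators):
        # Bootstrap sample: draw len(X) row indices with replacement
        idx = rng.integers(0, len(X), size=len(X))
        # max_features="sqrt": each split looks at a random subset of the features
        tree = DecisionTreeClassifier(max_features="sqrt", random_state=i)
        trees.append(tree.fit(X[idx], y[idx]))
    return trees


def predict_forest(trees, X):
    """Hypothetical helper: hard majority vote across trees (labels must be non-negative ints)."""
    votes = np.stack([tree.predict(X) for tree in trees])  # shape (n_trees, n_samples)
    return np.array([np.bincount(column).argmax() for column in votes.T])


# Synthetic two-class data: first 300 rows for training, last 100 for testing
X_rf, y_rf = make_classification(n_samples=400, n_features=4, n_informative=2,
                                 n_redundant=0, n_clusters_per_class=1, random_state=0)
forest = fit_forest(X_rf[:300], y_rf[:300])
rf_acc = (predict_forest(forest, X_rf[300:]) == y_rf[300:]).mean()
print(f"held-out accuracy: {rf_acc:.2f}")
```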

AdaBoost fits a classifier to a dataset and then fits copies of that classifier to the same dataset. After each round, it increases the weights of incorrectly classified samples so that the next classifier concentrates on correcting them.
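The reweighting step is the heart of AdaBoost. Below is a minimal sketch of the classic two-class algorithm, with labels coded as -1/+1 and depth-1 decision trees (“stumps”) as the base classifier. The helpers fit_adaboost and predict_adaboost and the synthetic data are hypothetical; scikit-learn’s AdaBoostClassifier implements a multiclass generalization of this procedure (SAMME), so its numbers will not match exactly:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier


def fit_adaboost(X, y, n_estimators=50):
    """Hypothetical helper: two-class AdaBoost; y must contain only -1 and +1."""
    w = np.full(len(X), 1.0 / len(X))  # start with equal sample weights
    stumps, alphas = [], []
    for _ in range(n_estimators):
        stump = DecisionTreeClassifier(max_depth=1)
        stump.fit(X, y, sample_weight=w)
        pred = stump.predict(X)
        # Weighted error rate (weights sum to 1), clipped to avoid log(0)
        err = np.clip(w[pred != y].sum(), 1e-10, 1 - 1e-10)
        alpha = 0.5 * np.log((1 - err) / err)  # more accurate stumps get a bigger say
        # Misclassified samples (y * pred == -1) are scaled by exp(alpha),
        # correctly classified ones by exp(-alpha); then renormalize
        w = w * np.exp(-alpha * y * pred)
        w /= w.sum()
        stumps.append(stump)
        alphas.append(alpha)
    return stumps, alphas


def predict_adaboost(stumps, alphas, X):
    """Hypothetical helper: weighted vote of all stumps; the sign gives the class."""
    score = sum(a * s.predict(X) for s, a in zip(stumps, alphas))
    return np.where(score >= 0, 1, -1)


X_ab, y_raw = make_classification(n_samples=400, n_features=4, n_informative=2,
                                  n_redundant=0, n_clusters_per_class=1, random_state=0)
y_ab = np.where(y_raw == 1, 1, -1)  # recode 0/1 labels as -1/+1

stumps, alphas = fit_adaboost(X_ab[:300], y_ab[:300])
ab_acc = (predict_adaboost(stumps, alphas, X_ab[300:]) == y_ab[300:]).mean()
print(f"held-out accuracy: {ab_acc:.2f}")
```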

12.3.8. Self Study

There’s a lot of jargon in these sections, so take a minute to review this list of useful terminology!

12.3.9. Your turn! 🚀

Each of these techniques has a large number of parameters that you can tweak. Research each one’s default parameters and think about what tweaking these parameters would mean for the model’s quality.
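A convenient starting point for this research is each estimator’s get_params() method, which returns its current parameter values (the defaults, for a freshly constructed model); set_params() changes them. A short sketch that prints a few defaults worth experimenting with:

```python
from sklearn.ensemble import AdaBoostClassifier, RandomForestClassifier
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC

estimators = {
    "SVC": SVC(),
    "KNN": KNeighborsClassifier(),
    "RFST": RandomForestClassifier(),
    "ADA": AdaBoostClassifier(),
}

# Freshly constructed estimators report their default parameter values
svc_params = estimators["SVC"].get_params()
print("SVC kernel:", svc_params["kernel"], "C:", svc_params["C"])
print("KNN n_neighbors:", estimators["KNN"].get_params()["n_neighbors"])
print("RFST n_estimators:", estimators["RFST"].get_params()["n_estimators"])
print("ADA n_estimators:", estimators["ADA"].get_params()["n_estimators"])

# set_params returns the estimator itself, so it can be chained
knn = KNeighborsClassifier().set_params(n_neighbors=15, weights="distance")
print(knn.get_params()["n_neighbors"], knn.get_params()["weights"])
```

You could then add such re-parameterized models to the classifiers dictionary and rerun classify() to see how each change affects the report.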

Assignment - Parameter play

12.3.10. Acknowledgments

Thanks to Microsoft for creating the open-source course ML-For-Beginners. It inspired the majority of the content in this chapter.