Introduction to Gradient Boosting

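```python
# Install the necessary dependencies (run inside a Jupyter/IPython notebook:
# the leading `!` executes a shell command using the current interpreter)
import sys

!{sys.executable} -m pip install --quiet pandas scikit-learn numpy matplotlib jupyterlab_myst ipython
```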

16. Introduction to Gradient Boosting

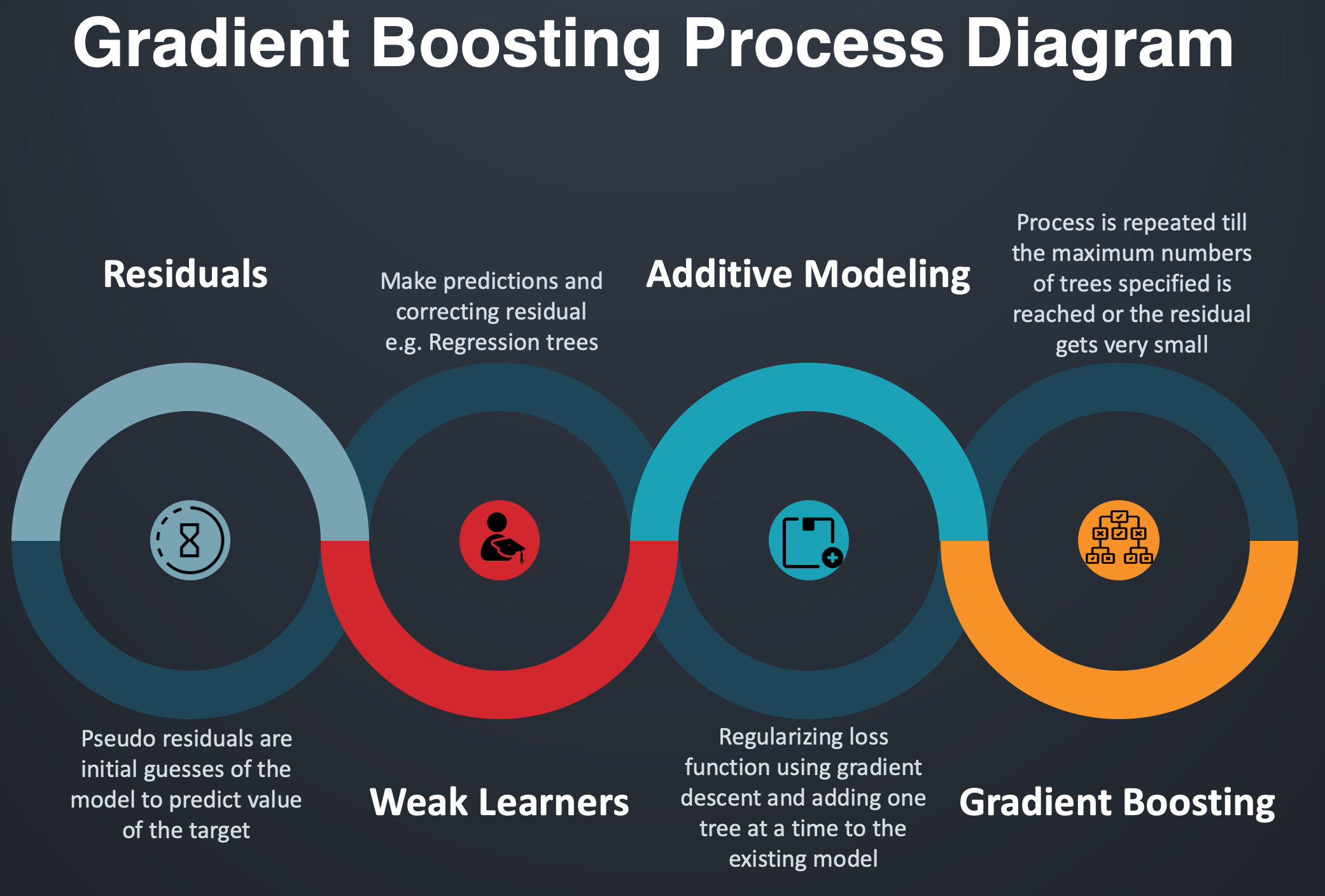

Gradient boosting is a popular machine learning algorithm used for both regression and classification tasks. It is an ensemble method that combines many weak learners (most commonly shallow decision trees) into a single strong learner by sequentially fitting each new model to the errors made by the models before it.
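To make the regression/classification distinction concrete, here is a minimal sketch using scikit-learn's `GradientBoostingRegressor` and `GradientBoostingClassifier` from `sklearn.ensemble`. The synthetic datasets and the hyperparameters (`n_estimators=200`, `max_depth=3`) are illustrative assumptions, not recommended settings.

```python
# Minimal sketch: the same boosting approach applied to a regression task and a
# classification task. Data and hyperparameters are illustrative assumptions.
from sklearn.datasets import make_classification, make_regression
from sklearn.ensemble import GradientBoostingClassifier, GradientBoostingRegressor
from sklearn.model_selection import train_test_split

# Regression: predict a continuous target
X_reg, y_reg = make_regression(n_samples=500, n_features=10, noise=10.0, random_state=0)
Xr_train, Xr_test, yr_train, yr_test = train_test_split(X_reg, y_reg, random_state=0)
reg = GradientBoostingRegressor(n_estimators=200, max_depth=3, random_state=0)
reg.fit(Xr_train, yr_train)
r2 = reg.score(Xr_test, yr_test)  # coefficient of determination (R^2) on held-out data

# Classification: predict a discrete class label
X_clf, y_clf = make_classification(n_samples=500, n_features=10, random_state=0)
Xc_train, Xc_test, yc_train, yc_test = train_test_split(X_clf, y_clf, random_state=0)
clf = GradientBoostingClassifier(n_estimators=200, max_depth=3, random_state=0)
clf.fit(Xc_train, yc_train)
accuracy = clf.score(Xc_test, yc_test)  # fraction of correctly predicted labels

print(f"Regression R^2: {r2:.3f}")
print(f"Classification accuracy: {accuracy:.3f}")
```

Both estimators share the same sequential boosting machinery; what mainly changes is the loss being minimized (squared error by default for the regressor, log-loss by default for the classifier).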

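The idea behind gradient boosting is to use a loss function to measure the difference between the predicted and actual values, and then to fit each new model to the negative gradient of that loss with respect to the current predictions (the so-called pseudo-residuals). For squared-error loss, these are simply the ordinary residuals (actual minus predicted values), up to a constant factor. In each iteration, a new model is trained on the errors that remain after all previous models, and the final prediction is obtained by summing an initial constant prediction and the predictions of all fitted models, each usually scaled by a learning rate.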
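The iterative procedure described above can be sketched from scratch for squared-error loss, where the negative gradient is just the residual. The class name `SimpleGradientBoostingRegressor` is hypothetical, and the choice of depth-2 `DecisionTreeRegressor` trees as weak learners with a fixed learning rate of 0.1 is an assumption made for illustration; library implementations add many refinements on top of this.

```python
# A from-scratch sketch of gradient boosting for regression with squared-error
# loss L(y, F) = (y - F)**2 / 2, whose negative gradient w.r.t. F is y - F.
# SimpleGradientBoostingRegressor is a hypothetical name for illustration only.
import numpy as np
from sklearn.tree import DecisionTreeRegressor


class SimpleGradientBoostingRegressor:
    def __init__(self, n_estimators=100, learning_rate=0.1, max_depth=2):
        self.n_estimators = n_estimators
        self.learning_rate = learning_rate
        self.max_depth = max_depth

    def fit(self, X, y):
        # Start from the best constant prediction under squared error: the mean
        self.f0_ = float(np.mean(y))
        F = np.full(len(y), self.f0_)
        self.trees_ = []
        for _ in range(self.n_estimators):
            residuals = y - F  # negative gradient of the loss = pseudo-residuals
            tree = DecisionTreeRegressor(max_depth=self.max_depth, random_state=0)
            tree.fit(X, residuals)  # weak learner fit to the remaining errors
            F += self.learning_rate * tree.predict(X)  # shrunken additive update
            self.trees_.append(tree)
        return self

    def predict(self, X):
        # Final prediction: initial constant plus the scaled output of every tree
        F = np.full(X.shape[0], self.f0_)
        for tree in self.trees_:
            F += self.learning_rate * tree.predict(X)
        return F


# Toy 1-D regression problem: a noisy sine wave
rng = np.random.default_rng(0)
X = rng.uniform(0, 6, size=(200, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.1, size=200)

model = SimpleGradientBoostingRegressor(n_estimators=100, learning_rate=0.1).fit(X, y)
pred = model.predict(X)

baseline_mse = np.mean((y - model.f0_) ** 2)  # error of the constant model alone
boosted_mse = np.mean((y - pred) ** 2)        # training error after 100 rounds
print(f"constant-model MSE: {baseline_mse:.4f}, boosted MSE: {boosted_mse:.4f}")
```

The learning rate shrinks each tree's contribution, so more trees are needed to fit the data, but the resulting ensemble usually generalizes better than one built from full-size steps.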

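The main advantage of gradient boosting is that it can capture complex, non-linear relationships and feature interactions, and it often achieves high accuracy on a wide range of tasks, particularly on tabular data. However, because the models are fit one after another, training is relatively expensive, and the method is prone to overfitting if hyperparameters such as the number of iterations, the learning rate, and the tree depth are not properly tuned.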
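One common way to keep overfitting in check is to monitor error on held-out data as trees are added. The sketch below uses two scikit-learn features that exist on `GradientBoostingRegressor`: `staged_predict`, which yields the ensemble's prediction after each boosting stage, and built-in early stopping via `n_iter_no_change` and `validation_fraction`. The `make_friedman1` dataset and all hyperparameter values are illustrative assumptions, not tuned settings; `subsample=0.8` (stochastic gradient boosting) is likewise just one possible choice.

```python
# Sketch: two guards against overfitting in scikit-learn's gradient boosting.
# Dataset and hyperparameter values are illustrative assumptions.
import numpy as np
from sklearn.datasets import make_friedman1
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split

X, y = make_friedman1(n_samples=600, noise=1.0, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(X, y, random_state=0)

# 1) Fit many stages, then find the stage with the lowest validation error.
model = GradientBoostingRegressor(
    n_estimators=500, learning_rate=0.1, max_depth=3, subsample=0.8, random_state=0
)
model.fit(X_train, y_train)
val_errors = [mean_squared_error(y_val, y_pred) for y_pred in model.staged_predict(X_val)]
best_n = int(np.argmin(val_errors)) + 1  # stages are 1-indexed
print(f"lowest validation MSE {min(val_errors):.3f} at {best_n} of 500 stages")

# 2) Let scikit-learn stop automatically once the score on an internal
#    validation split (20% of the training data) stops improving for 10 stages.
early = GradientBoostingRegressor(
    n_estimators=500, learning_rate=0.1, max_depth=3,
    n_iter_no_change=10, validation_fraction=0.2, random_state=0,
)
early.fit(X_train, y_train)
print(f"early stopping kept {early.n_estimators_} of 500 stages")
```

Lowering `learning_rate` typically moves the best stage count upward, which is why the number of iterations and the learning rate are usually tuned together.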

In this section, we explore gradient boosting in more detail.

Fig. 16.1 Gradient Boosting Process Diagram