ML neural network - Assignment 1

42.52. ML neural network - Assignment 1

In this notebook, we’re going to build a neural network using naught but pure numpy and your willpower. It’s going to be fun, I promise!

import numpy as np

np.random.seed(42)

Here goes our main class: a layer that can .forward() and .backward().

class Layer:
    """
    A building block. Each layer is capable of performing two things:

    - Process input to get output: output = layer.forward(input)
    - Propagate gradients through itself: grad_input = layer.backward(input, grad_output)

    Some layers also have learnable parameters which they update during layer.backward.
    """

    def __init__(self):
        """Here you can initialize layer parameters (if any) and auxiliary stuff."""
        # A dummy layer does nothing
        pass

    def forward(self, input):
        """
        Takes input data of shape [batch, input_units], returns output data [batch, output_units]
        """
        # A dummy layer just returns whatever it gets as input.
        return input

    def backward(self, input, grad_output):
        """
        Performs a backpropagation step through the layer, with respect to the given input.

        To compute loss gradients w.r.t input, you need to apply chain rule (backprop):

        d loss / d x = (d loss / d layer) * (d layer / d x)

        Luckily, you already receive d loss / d layer as input, so you only need to multiply it by d layer / d x.

        If your layer has parameters (e.g. dense layer), you also need to update them here using d loss / d layer
        """
        # The gradient of a dummy layer is precisely grad_output, but we'll write it more explicitly
        num_units = input.shape[1]
        d_layer_d_input = np.eye(num_units)
        return np.dot(grad_output, d_layer_d_input)  # chain rule
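A common way to sanity-check a backward implementation is to compare it with a finite-difference estimate of the gradient. The sketch below does this for a stand-in identity layer. The names `numeric_grad` and `IdentityLayer`, and the toy sum-of-squares loss, are illustrative assumptions and not part of the assignment.

```python
import numpy as np

def numeric_grad(f, x, eps=1e-5):
    """Central-difference estimate of d f(x) / d x for a scalar-valued f."""
    grad = np.zeros_like(x)
    for idx in np.ndindex(*x.shape):
        old = x[idx]
        x[idx] = old + eps
        f_plus = f(x)
        x[idx] = old - eps
        f_minus = f(x)
        x[idx] = old  # restore the original value
        grad[idx] = (f_plus - f_minus) / (2 * eps)
    return grad

class IdentityLayer:
    """Stand-in for the dummy layer: forward returns its input unchanged."""
    def forward(self, input):
        return input

    def backward(self, input, grad_output):
        return np.dot(grad_output, np.eye(input.shape[1]))

layer = IdentityLayer()
x = np.linspace(-1, 1, 6).reshape(2, 3)

# Toy scalar loss: sum of squared outputs, so d loss / d output = 2 * output.
loss = lambda inp: np.sum(layer.forward(inp) ** 2)
grad_output = 2 * layer.forward(x)

analytic = layer.backward(x, grad_output)
numeric = numeric_grad(loss, x.copy())
print(np.allclose(analytic, numeric, atol=1e-6))
```

The same comparison should work for any layer whose backward returns the gradient with respect to its input, as long as the check runs before the layer updates its own parameters.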

42.52.1. The road ahead

We’re going to build a neural network that classifies MNIST digits. To do so, we’ll need a few building blocks:

Dense layer - a fully-connected layer, \(f(X)=X \cdot W + \vec{b}\)

ReLU layer (or any other nonlinearity you want)

Loss function - crossentropy

Backprop algorithm - stochastic gradient descent with backpropagated gradients

Let’s approach them one at a time.

42.52.2. Nonlinearity layer

This is the simplest layer you can get: it simply applies a nonlinearity to each element of its input.

class ReLU(Layer):
    def __init__(self):
        """ReLU layer simply applies elementwise rectified linear unit to all inputs"""
        pass

    def forward(self, input):
        """Apply elementwise ReLU to [batch, input_units] matrix"""
        output = np.maximum(0, input)
        return output

    def backward(self, input, grad_output):
        """Compute gradient of loss w.r.t. ReLU input"""
        relu_grad = input > 0
        return grad_output * relu_grad
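To make the forward and backward rules concrete, here is a small worked example on a hand-picked 2x3 input. It uses the same two expressions as the class above, written inline. The input values and the all-ones `grad_output` are arbitrary choices for illustration.

```python
import numpy as np

x = np.array([[-2.0, 0.0, 3.0],
              [1.5, -0.5, 0.0]])
grad_output = np.ones_like(x)  # pretend the loss gradient w.r.t. the output is all ones

out = np.maximum(0, x)               # forward: negative entries are clipped to zero
grad_input = grad_output * (x > 0)   # backward: gradient passes only where the input was positive

print(out)
print(grad_input)
```

Note that with the `input > 0` mask, the gradient at exactly zero is taken to be zero.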

42.52.3. Dense layer

Now let’s build something more complicated. Unlike nonlinearity, a dense layer actually has something to learn.

A dense layer applies an affine transformation. In vectorized form, it can be described as: \(f(X) = X \cdot W + \vec{b}\)

Where

X is an object-feature matrix of shape [batch_size, num_features],

W is a weight matrix [num_features, num_outputs]

and b is a vector of num_outputs biases.

Both W and b are initialized during layer creation and updated each time backward is called.

class Dense(Layer):
    def __init__(self, input_units, output_units, learning_rate=0.1):
        """
        A dense layer is a layer which performs a learned affine transformation:
        f(x) = <x*W> + b
        """
        self.learning_rate = learning_rate

        # initialize weights with small random numbers. We use normal initialization,
        # but surely there is something better. Try this once you got it working: http://bit.ly/2vTlmaJ
        self.weights = np.random.randn(input_units, output_units) * 0.01
        self.biases = np.zeros(output_units)

    def forward(self, input):
        """
        Perform an affine transformation:
        f(x) = <x*W> + b

        input shape: [batch, input_units]
        output shape: [batch, output units]
        """
        return np.dot(input, self.weights) + self.biases

    def backward(self, input, grad_output):
        # compute d loss / d x = d loss / d dense * d dense / d x
        # where d dense / d x = weights transposed
        grad_input = np.dot(grad_output, self.weights.T)

        # compute gradient w.r.t. weights and biases.
        # Note: grad_output coming from the loss is already divided by the batch size, so
        # averaging over the batch again scales the update by 1/batch_size compared to the
        # exact gradient of the mean loss (np.dot(input.T, grad_output) and grad_output.sum(axis=0)).
        # The direction is unchanged; only the effective learning rate is smaller.
        grad_weights = np.dot(input.T, grad_output) / input.shape[0]
        grad_biases = grad_output.mean(axis=0)

        assert grad_weights.shape == self.weights.shape and grad_biases.shape == self.biases.shape

        # Here we perform a stochastic gradient descent step.
        # Later on, you can try replacing that with something better.
        self.weights = self.weights - self.learning_rate * grad_weights
        self.biases = self.biases - self.learning_rate * grad_biases

        return grad_input
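The chain-rule expressions for an affine layer can be checked numerically. The sketch below compares the gradients with respect to X, W and b against finite differences. It is a standalone check, not the layer class itself: the random shapes, the toy loss `sum((X @ W + b) * G)` and the helper `numeric_grad` are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(4, 3))   # [batch, input_units]
W = rng.normal(size=(3, 2))   # [input_units, output_units]
b = rng.normal(size=2)        # [output_units]
G = rng.normal(size=(4, 2))   # plays the role of grad_output = d loss / d output

def toy_loss():
    # Scalar loss whose gradient w.r.t. the layer output is exactly G.
    return np.sum((X @ W + b) * G)

# Analytic gradients from the chain rule.
grad_X = G @ W.T          # d loss / d X
grad_W = X.T @ G          # d loss / d W
grad_b = G.sum(axis=0)    # d loss / d b

def numeric_grad(f, x, eps=1e-6):
    """Central differences; perturbs x in place and restores it afterwards."""
    grad = np.zeros_like(x)
    for idx in np.ndindex(*x.shape):
        old = x[idx]
        x[idx] = old + eps
        f_plus = f()
        x[idx] = old - eps
        f_minus = f()
        x[idx] = old
        grad[idx] = (f_plus - f_minus) / (2 * eps)
    return grad

print(np.allclose(grad_X, numeric_grad(toy_loss, X), atol=1e-6))
print(np.allclose(grad_W, numeric_grad(toy_loss, W), atol=1e-6))
print(np.allclose(grad_b, numeric_grad(toy_loss, b), atol=1e-6))
```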

42.52.4. Testing the dense layer

Here we have a few tests to make sure your dense layer works properly. You can just run them, get 3 “well done”s and forget they ever existed.

… or not get 3 “well done”s and go fix stuff. If that is the case, here are some tips for you:

Make sure you compute gradients for b as the sum of gradients over the batch, not the mean. grad_output is already divided by the batch size.

If you’re debugging, try saving gradients in class fields, like “self.grad_w = grad_w”, or printing the first 3-5 weights.

If nothing else helps, try ignoring tests and proceed to network training. If it trains alright, you may be off by something that does not affect network training.
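The first tip can be illustrated with a few numbers. In this sketch the per-sample gradients are made up. The point is only that once `grad_output` has been divided by the batch size, summing over the batch gives the gradient of the mean loss, while taking the mean divides by the batch size a second time.

```python
import numpy as np

batch_size = 4
# Made-up per-sample gradients of the loss w.r.t. the layer output, before averaging.
per_sample = np.array([[1.0, -2.0],
                       [3.0, 0.0],
                       [-1.0, 4.0],
                       [1.0, 2.0]])
grad_output = per_sample / batch_size     # what the loss gradient hands to backward

grad_b_sum = grad_output.sum(axis=0)      # gradient of the mean loss w.r.t. b
grad_b_mean = grad_output.mean(axis=0)    # smaller by another factor of batch_size

print(grad_b_sum, grad_b_mean)
```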

l = Dense(128, 150)

assert -0.05 < l.weights.mean() < 0.05 and 1e-3 < l.weights.std() < 1e-1, \
    "The initial weights must have zero mean and small variance. " \
    "If you know what you're doing, remove this assertion."

assert -0.05 < l.biases.mean() < 0.05, "Biases must be zero mean. Ignore if you have a reason to do otherwise."

# To test the outputs, we explicitly set weights with fixed values. DO NOT DO THAT IN ACTUAL NETWORK!
l = Dense(3, 4)

x = np.linspace(-1, 1, 2*3).reshape([2, 3])
l.weights = np.linspace(-1, 1, 3*4).reshape([3, 4])
l.biases = np.linspace(-1, 1, 4)

assert np.allclose(l.forward(x), np.array([[ 0.07272727, 0.41212121, 0.75151515, 1.09090909],
                                           [-0.90909091, 0.08484848, 1.07878788, 2.07272727]]))

print("Well done!")

Well done!

42.52.5. The loss function

Since we want to predict probabilities, it would be logical for us to define softmax nonlinearity on top of our network and compute loss given predicted probabilities. However, there is a better way to do so.

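If you write down the expression for crossentropy as a function of softmax logits (a), you’ll see:

\[ loss = -\log \frac{e^{a_{correct}}}{\sum_i e^{a_i}} \]

If you take a closer look, you’ll see that it can be rewritten as:

\[ loss = -a_{correct} + \log \sum_i e^{a_i} \]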

It’s called Log-softmax and it’s better than naive log(softmax(a)) in all aspects:

Better numerical stability

Easier to get derivative right

Marginally faster to compute

So why not just use log-softmax throughout our computation and never actually bother to estimate probabilities?

Here you are! We’ve defined both loss functions for you so that you can focus on the neural network part.

def softmax_crossentropy_with_logits(logits, reference_answers):
    """Compute crossentropy from logits[batch,n_classes] and ids of correct answers"""
    logits_for_answers = logits[np.arange(len(logits)), reference_answers]

    xentropy = - logits_for_answers + np.log(np.sum(np.exp(logits), axis=-1))

    return xentropy

def grad_softmax_crossentropy_with_logits(logits, reference_answers):
    """Compute crossentropy gradient from logits[batch,n_classes] and ids of correct answers"""
    ones_for_answers = np.zeros_like(logits)
    ones_for_answers[np.arange(len(logits)), reference_answers] = 1

    softmax = np.exp(logits) / np.exp(logits).sum(axis=-1, keepdims=True)

    return (- ones_for_answers + softmax) / logits.shape[0]
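The numerical-stability point deserves a caveat: the log-sum-exp term can still overflow when logits are very large, because `np.exp(1000.0)` is already `inf`. A common remedy is to subtract the row-wise maximum before exponentiating, which leaves the loss unchanged. The function below is a hedged sketch of that idea. The name `stable_softmax_crossentropy` is hypothetical and it is not one of the functions provided by the assignment.

```python
import numpy as np

def stable_softmax_crossentropy(logits, reference_answers):
    """Crossentropy from logits, shifting each row by its max before exponentiating."""
    shifted = logits - logits.max(axis=-1, keepdims=True)   # largest entry per row becomes 0
    log_sum_exp = np.log(np.sum(np.exp(shifted), axis=-1))
    return -shifted[np.arange(len(logits)), reference_answers] + log_sum_exp

logits = np.array([[1000.0, 0.0, -1000.0],   # extreme logits that would overflow np.exp directly
                   [1.0, 2.0, 3.0]])
answers = np.array([0, 2])

loss = stable_softmax_crossentropy(logits, answers)
print(loss)
```

The shift works because adding the same constant to every logit in a row changes neither the softmax probabilities nor the crossentropy.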

42.52.6. Full network

Now let’s combine what we’ve just built into a working neural network. As we announced, we’re gonna use this monster to classify handwritten digits, so let’s get them loaded.

import pandas as pd

def load_dataset(flatten=False):
    # Read the training and test set images and labels from local CSV files.
    # The first column holds the label, the remaining columns hold the pixels.
    train_df = pd.read_csv("../../assets/data/mnist_train.csv")
    test_df = pd.read_csv("../../assets/data/mnist_test.csv")

    # Keep the first 6000 training rows and the first 1000 test rows.
    X_train = train_df.iloc[:6000, 1:].values
    y_train = train_df.iloc[:6000, 0].values
    X_test = test_df.iloc[:1000, 1:].values
    y_test = test_df.iloc[:1000, 0].values

    # We reserve the last 1000 training examples for validation.
    X_train, X_val = X_train[:-1000], X_train[-1000:]
    y_train, y_val = y_train[:-1000], y_train[-1000:]

    if flatten:
        X_train = X_train.reshape([-1, 28**2])
        X_val = X_val.reshape([-1, 28**2])
        X_test = X_test.reshape([-1, 28**2])

    # We just return all the arrays in order.
    # (It doesn't matter how we do this as long as we can read them again.)
    return X_train, y_train, X_val, y_val, X_test, y_test

%matplotlib inline

import matplotlib.pyplot as plt

X_train, y_train, X_val, y_val, X_test, y_test = load_dataset(flatten=True)

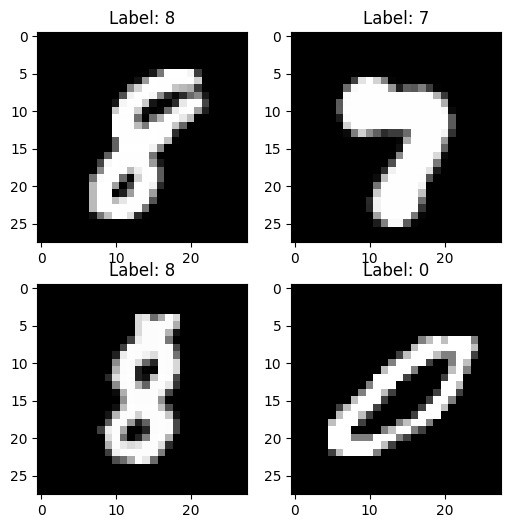

plt.figure(figsize=[6,6])
for i in range(4):
    plt.subplot(2,2,i+1)
    plt.title("Label: %i"%y_train[i])
    plt.imshow(X_train[i].reshape([28,28]),cmap='gray');

X_train shape: (6000, 784)

y_train shape: (6000,)

X_train shape: (5000, 784)

y_train shape: (5000,)

X_test shape: (1000, 784)

y_test shape: (1000,)

X_val shape: (1000, 784)

y_val shape: (1000,)

We’ll define network as a list of layers, each applied on top of previous one. In this setting, computing predictions and training becomes trivial.

network = []

network.append(Dense(X_train.shape[1], 100))

network.append(ReLU())

network.append(Dense(100, 200))

network.append(ReLU())

network.append(Dense(200, 10))

def forward(network, X):
    """
    Compute activations of all network layers by applying them sequentially.
    Return a list of activations for each layer.
    Make sure last activation corresponds to network logits.
    """
    activations = []
    input = X

    for layer in network:
        activations.append(layer.forward(input))
        input = activations[-1]

    assert len(activations) == len(network)
    return activations

def predict(network, X):
    """
    Use network to predict the most likely class for each sample.
    """
    logits = forward(network, X)[-1]
    return logits.argmax(axis=-1)
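To see what the list of activations looks like, here is a small hand-computable example. It is a sketch under stated assumptions: `FixedAffine` is a hypothetical layer with hand-picked, non-trainable weights, and the sequential forward loop is re-declared inline so the snippet runs on its own.

```python
import numpy as np

class FixedAffine:
    """Hypothetical toy layer with hand-picked, non-trainable weights."""
    def __init__(self, weights, biases):
        self.weights = np.asarray(weights, dtype=float)
        self.biases = np.asarray(biases, dtype=float)

    def forward(self, input):
        return input @ self.weights + self.biases

class ToyReLU:
    def forward(self, input):
        return np.maximum(0, input)

toy_network = [
    FixedAffine([[1, -1], [0, 2]], [0, 0]),
    ToyReLU(),
    FixedAffine([[1, 0, -1], [0, 1, 1]], [0, 0, 0.5]),
]

X = np.array([[1.0, 2.0],
              [3.0, -1.0]])

# Apply the layers one after another, keeping every intermediate result.
activations = []
current = X
for layer in toy_network:
    current = layer.forward(current)
    activations.append(current)

logits = activations[-1]              # last activation = network logits
predictions = logits.argmax(axis=-1)  # most likely class per sample
print(logits)
print(predictions)
```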

42.52.7. Backprop

You can now define the backpropagation step for the neural network. Please read the docstring.

def train(network, X, y):
    """
    Train your network on a given batch of X and y.
    You first need to run forward to get all layer activations.
    You can estimate loss and loss_grad, obtaining dL / dy_pred
    Then you can run layer.backward going from last layer to first,
    propagating the gradient of input to previous layers.

    After you called backward for all layers, all Dense layers have already made one gradient step.
    """
    # Get the layer activations
    layer_activations = forward(network, X)
    layer_inputs = [X] + layer_activations  # layer_inputs[i] is an input for network[i]
    logits = layer_activations[-1]

    # Compute the loss and the initial gradient
    loss = softmax_crossentropy_with_logits(logits, y)
    loss_grad = grad_softmax_crossentropy_with_logits(logits, y)

    # propagate gradients through network layers using .backward
    # hint: start from last layer and move to earlier layers
    for layer_i in range(len(network))[::-1]:
        layer = network[layer_i]
        loss_grad = layer.backward(layer_inputs[layer_i], loss_grad)

    return np.mean(loss)
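The reverse loop is easier to follow on a network small enough to differentiate by hand. In the sketch below, `Scale` is a hypothetical parameter-free layer and the loss is simply the sum of the outputs. Both are assumptions chosen so that the expected gradient, d/dx of 3 * relu(2 * x), can be worked out directly.

```python
import numpy as np

class Scale:
    """Hypothetical toy layer: multiplies its input by a constant factor."""
    def __init__(self, factor):
        self.factor = factor

    def forward(self, input):
        return input * self.factor

    def backward(self, input, grad_output):
        return grad_output * self.factor  # d(factor * x) / dx = factor

class ToyReLU:
    def forward(self, input):
        return np.maximum(0, input)

    def backward(self, input, grad_output):
        return grad_output * (input > 0)

network = [Scale(2.0), ToyReLU(), Scale(3.0)]
X = np.array([[1.0, -2.0]])

# Forward pass, keeping every intermediate activation.
activations = []
current = X
for layer in network:
    current = layer.forward(current)
    activations.append(current)
layer_inputs = [X] + activations  # layer_inputs[i] is the input that network[i] received

# Toy loss: the sum of the final outputs, so d loss / d output is all ones.
loss_grad = np.ones_like(activations[-1])

# Backward pass: last layer first, each layer gets the input it saw on the way forward.
for layer_i in range(len(network))[::-1]:
    loss_grad = network[layer_i].backward(layer_inputs[layer_i], loss_grad)

print(loss_grad)
```

The gradient comes out as 6 for the positive input and 0 for the negative one, since the ReLU blocks everything that flows back through a negative pre-activation.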

Instead of tests, we provide you with a training loop that prints training and validation accuracies on every epoch.

If your implementations of forward and backward are correct, your accuracy should grow from 90~93% to >97% with the default network.

42.52.8. Training loop

As usual, we split data into minibatches, feed each such minibatch into the network and update weights.

try:
    from tqdm import trange  # optional progress bar
except ImportError:
    trange = range  # fall back to a plain range when tqdm is not installed

def iterate_minibatches(inputs, targets, batchsize, shuffle=False):
    assert len(inputs) == len(targets)
    if shuffle:
        indices = np.random.permutation(len(inputs))
    for start_idx in trange(0, len(inputs) - batchsize + 1, batchsize):
        if shuffle:
            excerpt = indices[start_idx:start_idx + batchsize]
        else:
            excerpt = slice(start_idx, start_idx + batchsize)
        yield inputs[excerpt], targets[excerpt]
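One detail of this generator is easy to miss: it only yields full batches, so any leftover samples at the end of an epoch are skipped. The sketch below re-declares the function with a plain `range` (an assumption, so the snippet does not depend on tqdm) and runs it on ten toy samples.

```python
import numpy as np

def iterate_minibatches(inputs, targets, batchsize, shuffle=False):
    assert len(inputs) == len(targets)
    if shuffle:
        indices = np.random.permutation(len(inputs))
    # The upper bound stops before any batch that would run past the end of the data.
    for start_idx in range(0, len(inputs) - batchsize + 1, batchsize):
        if shuffle:
            excerpt = indices[start_idx:start_idx + batchsize]
        else:
            excerpt = slice(start_idx, start_idx + batchsize)
        yield inputs[excerpt], targets[excerpt]

inputs = np.arange(10).reshape(10, 1)   # ten samples with one feature each
targets = np.arange(10)                 # target i matches input i, to check alignment

batches = list(iterate_minibatches(inputs, targets, batchsize=4))
print([y.tolist() for _, y in batches])  # two full batches; samples 8 and 9 are dropped

shuffled = list(iterate_minibatches(inputs, targets, batchsize=4, shuffle=True))
print(all((x[:, 0] == y).all() for x, y in shuffled))  # inputs and targets stay paired
```

With shuffling enabled, a different pair of samples is left out on each pass, so over many epochs every sample still gets used.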

from IPython.display import clear_output

train_log = []
val_log = []

for epoch in range(25):
    for x_batch, y_batch in iterate_minibatches(X_train, y_train, batchsize=32, shuffle=True):
        train(network, x_batch, y_batch)

    train_log.append(np.mean(predict(network, X_train) == y_train))
    val_log.append(np.mean(predict(network, X_val) == y_val))

    clear_output()
    print("Epoch", epoch)
    print("Train accuracy:", train_log[-1])
    print("Val accuracy:", val_log[-1])
    plt.plot(train_log, label='train accuracy')
    plt.plot(val_log, label='val accuracy')
    plt.legend(loc='best')
    plt.grid()
    plt.show()

What you should see: train accuracy should increase to near 100%. Val accuracy will also increase, albeit to a smaller value.

What else to try: You can try implementing different nonlinearities, dropout or composing neural network of more layers. See how this affects training speed, overfitting & final quality.
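As one possible starting point for a different nonlinearity, here is a sketch of a leaky ReLU that follows the same forward/backward interface. The class name, the `slope` parameter and its default value are assumptions for illustration. In the notebook it would subclass Layer, which is omitted here so the snippet runs on its own.

```python
import numpy as np

class LeakyReLU:
    """Hypothetical alternative nonlinearity: x for x > 0, slope * x otherwise."""
    def __init__(self, slope=0.01):
        self.slope = slope

    def forward(self, input):
        return np.where(input > 0, input, self.slope * input)

    def backward(self, input, grad_output):
        # Local derivative is 1 for positive inputs and `slope` elsewhere.
        return grad_output * np.where(input > 0, 1.0, self.slope)

layer = LeakyReLU(slope=0.1)
x = np.array([[-2.0, 0.0, 3.0]])

out = layer.forward(x)
grad = layer.backward(x, np.ones_like(x))
print(out, grad)
```

Unlike the plain ReLU, negative inputs still receive a small gradient here, which is the usual motivation for trying this variant.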

Good hunting!

42.52.9. Acknowledgments

Thanks to yandexdataschool for creating the open-source course Practical_DL. It inspired the majority of the content in this assignment.