Implementing a GPT model from Scratch To Generate Text


41. Implementing a GPT model from Scratch To Generate Text

from importlib.metadata import version

import matplotlib

import tiktoken

import torch

print("matplotlib version:", version("matplotlib"))

print("torch version:", version("torch"))

print("tiktoken version:", version("tiktoken"))

matplotlib version: 3.7.2

torch version: 2.2.1

tiktoken version: 0.5.1

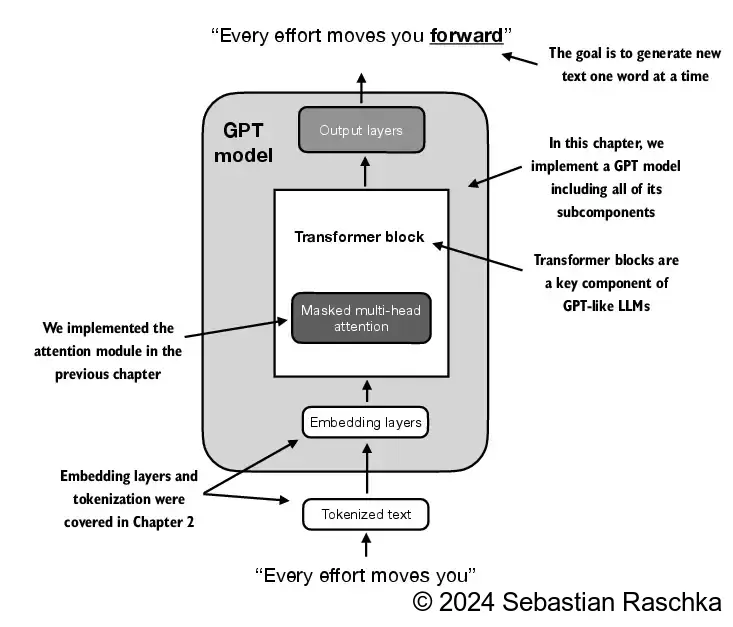

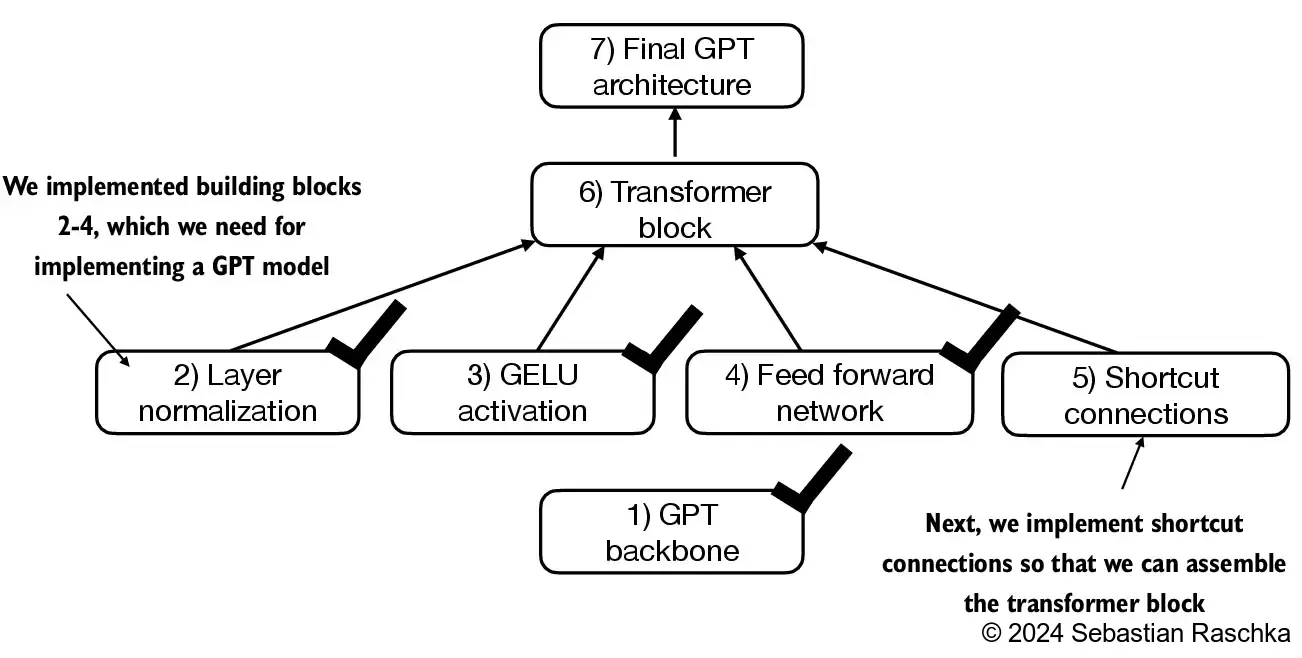

In this chapter, we implement a GPT-like LLM architecture; the next chapter will focus on training this LLM.

41.1. Coding an LLM architecture

Models like GPT and Llama generate words sequentially and are based on the decoder part of the original transformer architecture. Therefore, these LLMs are often referred to as “decoder-like” LLMs. Compared to conventional deep learning models, LLMs are larger, mainly due to their vast number of parameters, not the amount of code. We’ll see that many elements are repeated in an LLM’s architecture.

In this chapter, we consider embedding and model sizes akin to a small GPT-2 model. We’ll specifically code the architecture of the smallest GPT-2 model (124 million parameters), as outlined in Radford et al.’s Language Models are Unsupervised Multitask Learners (note that the initial report lists it as 117M parameters, but this was later corrected in the model weight repository).

Configuration details for the 124 million parameter GPT-2 model include:

GPT_CONFIG_124M = {

"vocab_size": 50257, # Vocabulary size

"ctx_len": 1024, # Context length

"emb_dim": 768, # Embedding dimension

"n_heads": 12, # Number of attention heads

"n_layers": 12, # Number of layers

"drop_rate": 0.1, # Dropout rate

"qkv_bias": False # Query-Key-Value bias

}

We use short variable names to avoid long lines of code later

"vocab_size" indicates a vocabulary size of 50,257 tokens, supported by the BPE tokenizer discussed in Chapter 2

"ctx_len" represents the model’s maximum input token count, as enabled by positional embeddings covered in Chapter 2

"emb_dim" is the embedding size for token inputs, converting each input token into a 768-dimensional vector

"n_heads" is the number of attention heads in the multi-head attention mechanism implemented in Chapter 3

"n_layers" is the number of transformer blocks within the model, which we’ll implement in upcoming sections

"drop_rate" is the dropout mechanism’s intensity, discussed in Chapter 3; 0.1 means dropping 10% of hidden units during training to mitigate overfitting

"qkv_bias" decides if the Linear layers in the multi-head attention mechanism (from Chapter 3) should include a bias vector when computing query (Q), key (K), and value (V) tensors; we’ll disable this option, which is standard practice in modern LLMs; however, we’ll revisit this later when loading pretrained GPT-2 weights from OpenAI into our reimplementation in Chapter 6

import torch

import torch.nn as nn

class DummyGPTModel(nn.Module):
    def __init__(self, cfg):
        super().__init__()
        self.tok_emb = nn.Embedding(cfg["vocab_size"], cfg["emb_dim"])
        self.pos_emb = nn.Embedding(cfg["ctx_len"], cfg["emb_dim"])
        self.drop_emb = nn.Dropout(cfg["drop_rate"])

        # Use a placeholder for TransformerBlock
        self.trf_blocks = nn.Sequential(
            *[DummyTransformerBlock(cfg) for _ in range(cfg["n_layers"])])

        # Use a placeholder for LayerNorm
        self.final_norm = DummyLayerNorm(cfg["emb_dim"])
        self.out_head = nn.Linear(
            cfg["emb_dim"], cfg["vocab_size"], bias=False
        )

    def forward(self, in_idx):
        batch_size, seq_len = in_idx.shape
        tok_embeds = self.tok_emb(in_idx)
        pos_embeds = self.pos_emb(torch.arange(seq_len, device=in_idx.device))
        x = tok_embeds + pos_embeds
        x = self.drop_emb(x)
        x = self.trf_blocks(x)
        x = self.final_norm(x)
        logits = self.out_head(x)
        return logits


class DummyTransformerBlock(nn.Module):
    def __init__(self, cfg):
        super().__init__()
        # A simple placeholder

    def forward(self, x):
        # This block does nothing and just returns its input.
        return x


class DummyLayerNorm(nn.Module):
    def __init__(self, normalized_shape, eps=1e-5):
        super().__init__()
        # The parameters here are just to mimic the LayerNorm interface.

    def forward(self, x):
        # This layer does nothing and just returns its input.
        return x

import tiktoken

tokenizer = tiktoken.get_encoding("gpt2")

batch = []

txt1 = "Every effort moves you"

txt2 = "Every day holds a"

batch.append(torch.tensor(tokenizer.encode(txt1)))

batch.append(torch.tensor(tokenizer.encode(txt2)))

batch = torch.stack(batch, dim=0)

print(batch)

tensor([[6109, 3626, 6100, 345],

[6109, 1110, 6622, 257]])

torch.manual_seed(123)

model = DummyGPTModel(GPT_CONFIG_124M)

logits = model(batch)

print("Output shape:", logits.shape)

print(logits)

Output shape: torch.Size([2, 4, 50257])

tensor([[[-1.2034, 0.3201, -0.7130, ..., -1.5548, -0.2390, -0.4667],

[-0.1192, 0.4539, -0.4432, ..., 0.2392, 1.3469, 1.2430],

[ 0.5307, 1.6720, -0.4695, ..., 1.1966, 0.0111, 0.5835],

[ 0.0139, 1.6755, -0.3388, ..., 1.1586, -0.0435, -1.0400]],

[[-1.0908, 0.1798, -0.9484, ..., -1.6047, 0.2439, -0.4530],

[-0.7860, 0.5581, -0.0610, ..., 0.4835, -0.0077, 1.6621],

[ 0.3567, 1.2698, -0.6398, ..., -0.0162, -0.1296, 0.3717],

[-0.2407, -0.7349, -0.5102, ..., 2.0057, -0.3694, 0.1814]]],

grad_fn=<UnsafeViewBackward0>)

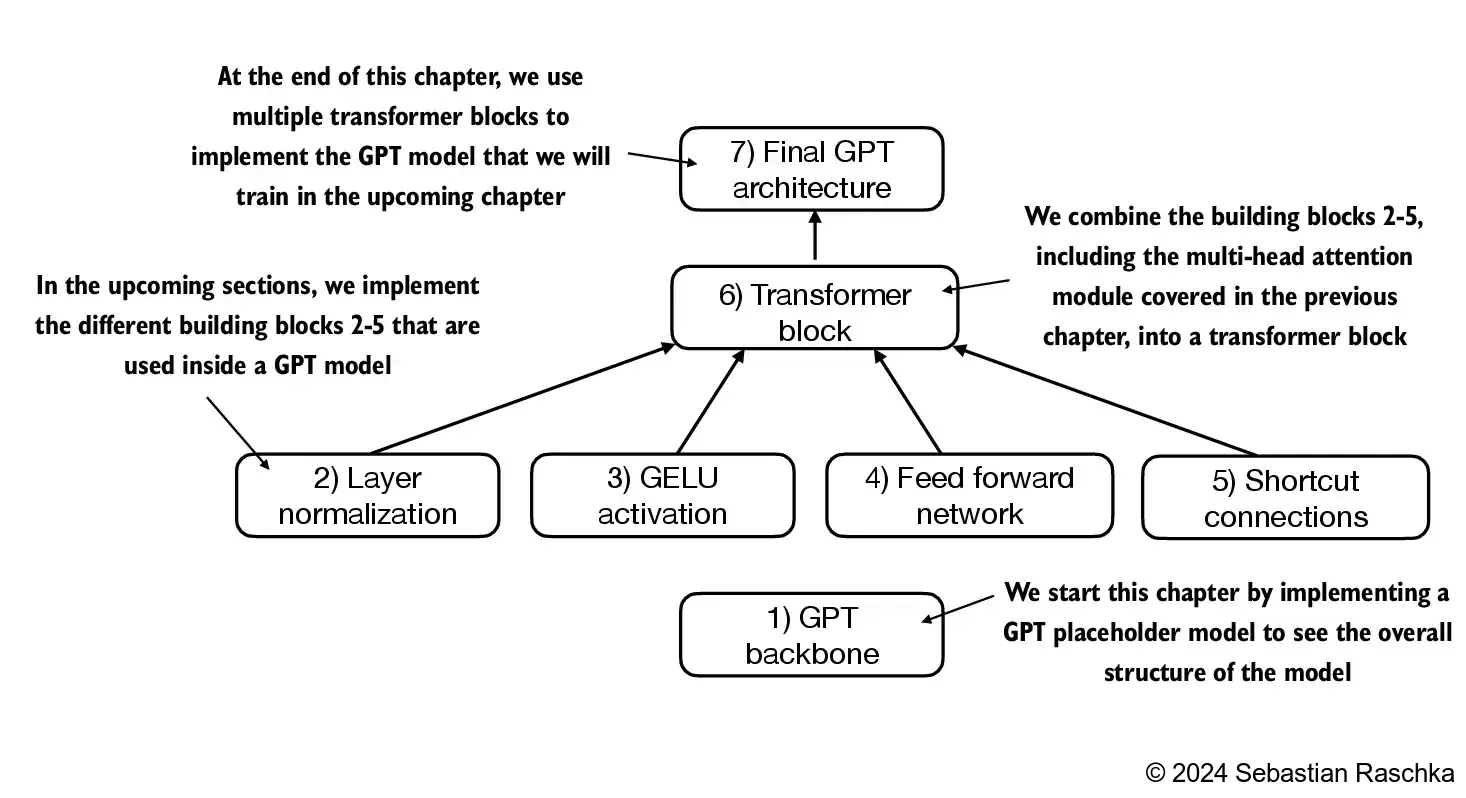

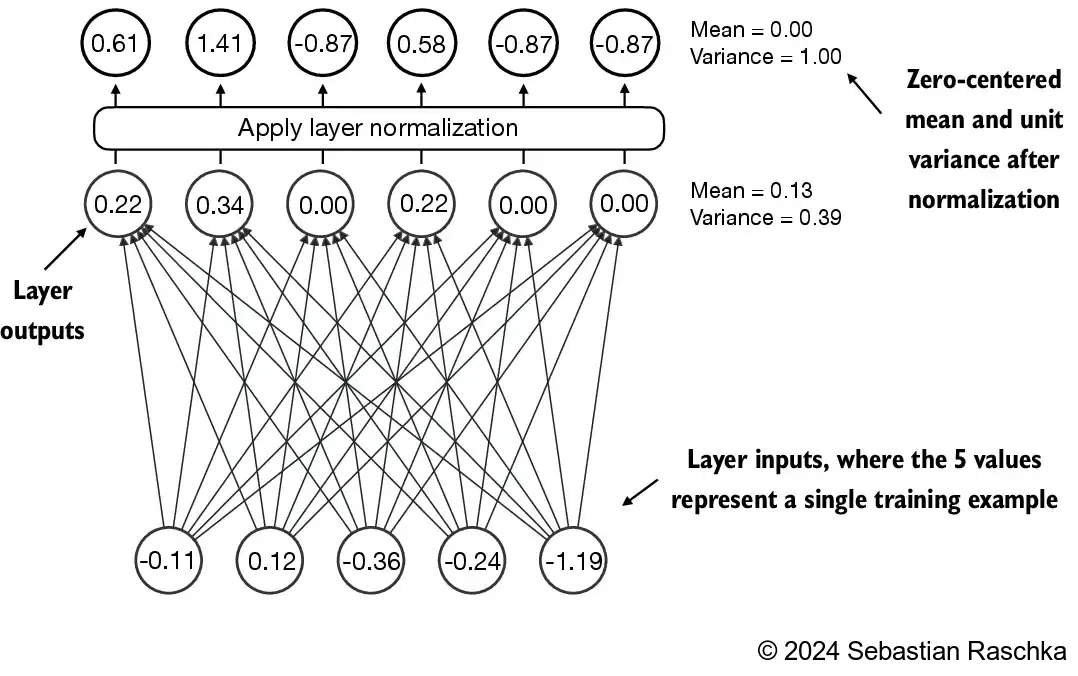

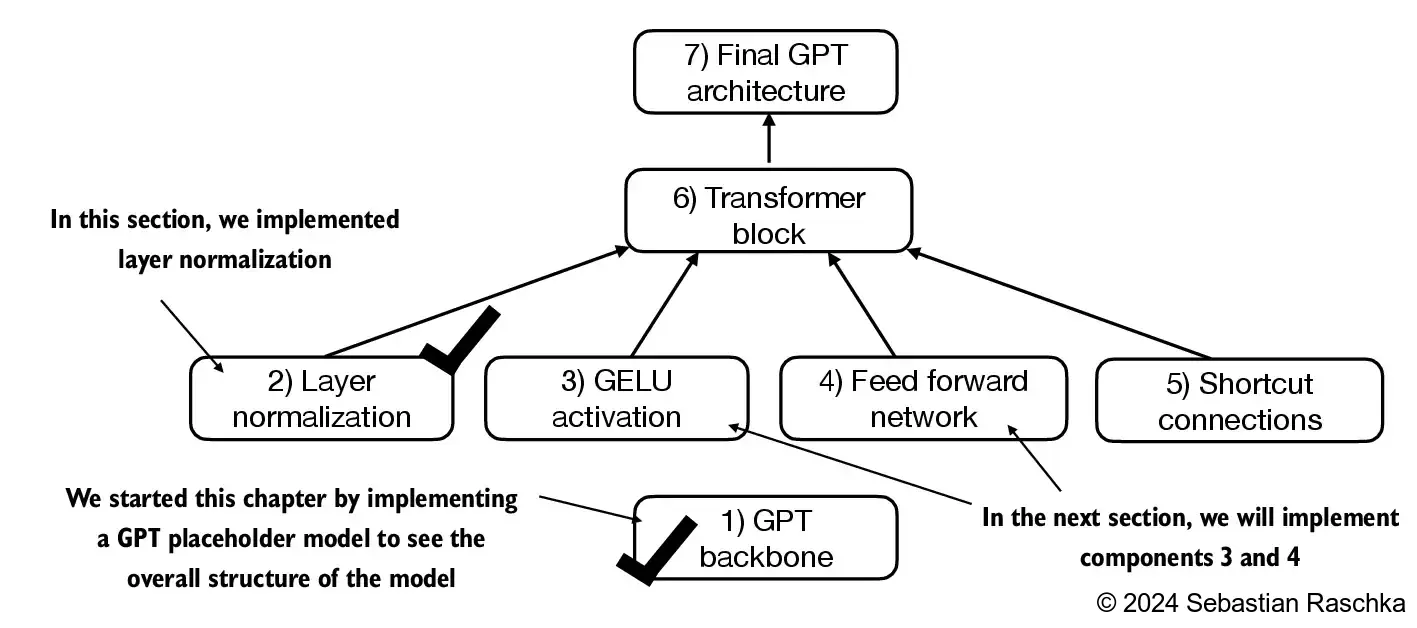

41.2. Normalizing activations with layer normalization

Layer normalization, also known as LayerNorm (Ba et al. 2016), centers the activations of a neural network layer around a mean of 0 and normalizes their variance to 1. This stabilizes training and enables faster convergence to effective weights. Layer normalization is applied both before and after the multi-head attention module within the transformer block, which we will implement later; it’s also applied before the final output layer.

Let’s see how layer normalization works by passing a small input sample through a simple neural network layer:

torch.manual_seed(123)

# create 2 training examples with 5 dimensions (features) each

batch_example = torch.randn(2, 5)

layer = nn.Sequential(nn.Linear(5, 6), nn.ReLU())

out = layer(batch_example)

print(out)

tensor([[0.2260, 0.3470, 0.0000, 0.2216, 0.0000, 0.0000],

[0.2133, 0.2394, 0.0000, 0.5198, 0.3297, 0.0000]],

grad_fn=<ReluBackward0>)

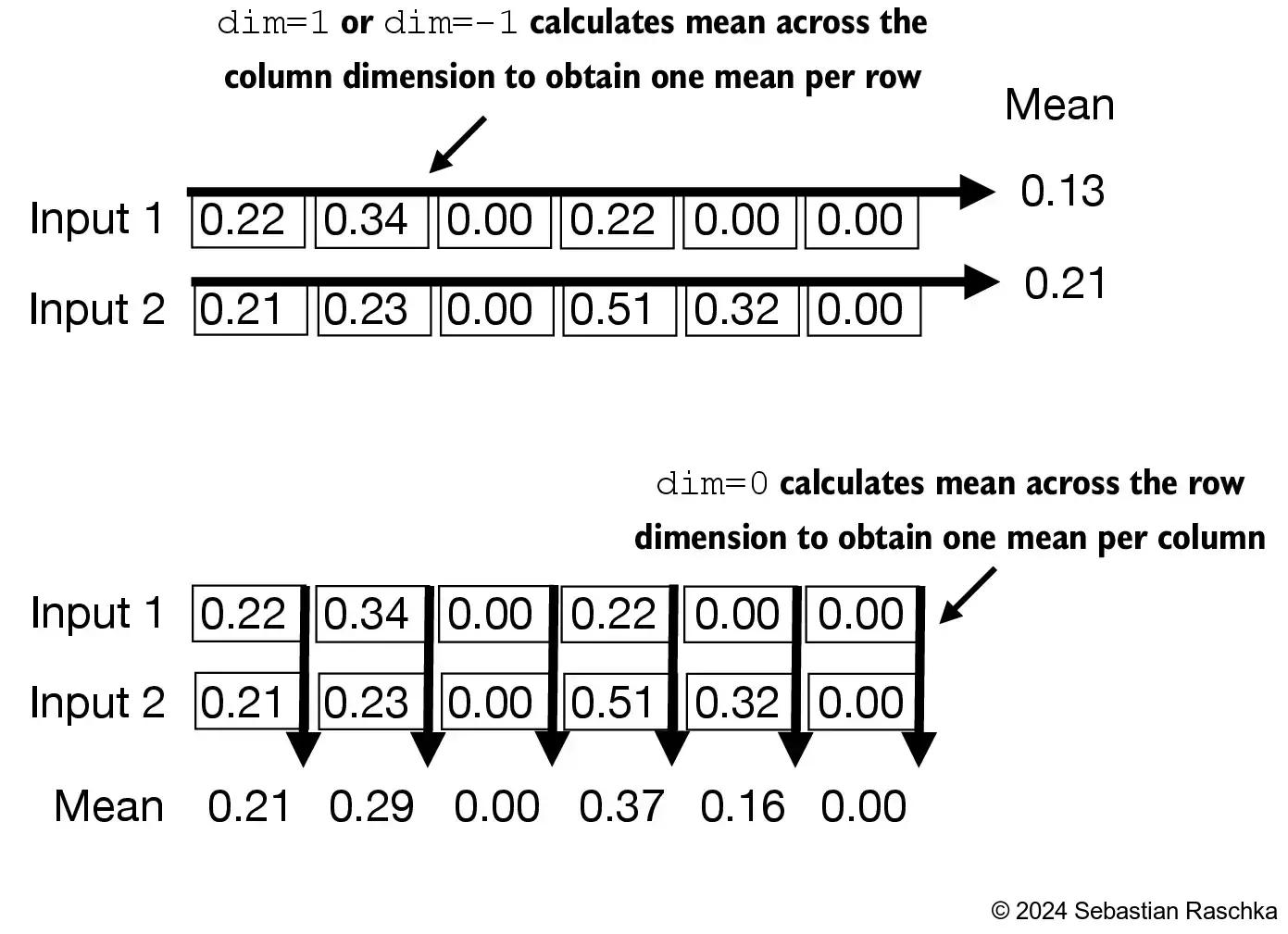

Let’s compute the mean and variance for each of the 2 inputs above:

mean = out.mean(dim=-1, keepdim=True)

var = out.var(dim=-1, keepdim=True)

print("Mean:\n", mean)

print("Variance:\n", var)

Mean:

tensor([[0.1324],

[0.2170]], grad_fn=<MeanBackward1>)

Variance:

tensor([[0.0231],

[0.0398]], grad_fn=<VarBackward0>)

The normalization is applied to each of the two inputs (rows) independently; using dim=-1 applies the calculation across the last dimension (in this case, the feature dimension) instead of the row dimension

Subtracting the mean and dividing by the square-root of the variance (standard deviation) centers the inputs to have a mean of 0 and a variance of 1 across the column (feature) dimension:

out_norm = (out - mean) / torch.sqrt(var)

print("Normalized layer outputs:\n", out_norm)

mean = out_norm.mean(dim=-1, keepdim=True)

var = out_norm.var(dim=-1, keepdim=True)

print("Mean:\n", mean)

print("Variance:\n", var)

Normalized layer outputs:

tensor([[ 0.6159, 1.4126, -0.8719, 0.5872, -0.8719, -0.8719],

[-0.0189, 0.1121, -1.0876, 1.5173, 0.5647, -1.0876]],

grad_fn=<DivBackward0>)

Mean:

tensor([[2.9802e-08],

[3.9736e-08]], grad_fn=<MeanBackward1>)

Variance:

tensor([[1.],

[1.]], grad_fn=<VarBackward0>)

Each input is centered at 0 and has a unit variance of 1; to improve readability, we can disable PyTorch’s scientific notation:

torch.set_printoptions(sci_mode=False)

print("Mean:\n", mean)

print("Variance:\n", var)

Mean:

tensor([[ 0.0000],

[ 0.0000]], grad_fn=<MeanBackward1>)

Variance:

tensor([[1.],

[1.]], grad_fn=<VarBackward0>)

Above, we normalized the features of each input
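To make the arithmetic behind these printed tensors concrete, here is a minimal framework-free sketch that repeats the calculation for the first row of the layer output. The values are copied from the rounded printout above, so the results only match to about four decimals; the variance divides by n - 1 because that is the default behavior of the out.var call used above.

```python
import math

# First row of the layer output printed above (rounded to 4 decimals).
row = [0.2260, 0.3470, 0.0000, 0.2216, 0.0000, 0.0000]
n = len(row)

mean = sum(row) / n
# Unbiased estimator (divide by n - 1), matching the default of out.var(...).
var = sum((v - mean) ** 2 for v in row) / (n - 1)

# Subtract the mean and divide by the standard deviation.
normalized = [(v - mean) / math.sqrt(var) for v in row]

# Check that the normalized row has mean 0 and variance 1.
norm_mean = sum(normalized) / n
norm_var = sum((v - norm_mean) ** 2 for v in normalized) / (n - 1)

print(round(mean, 4), round(var, 4))
print([round(v, 4) for v in normalized])
print(round(norm_mean, 6), round(norm_var, 6))
```

The first two printed numbers should agree with the first row of the Mean and Variance tensors above, up to rounding.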

Now, using the same idea, we can implement a LayerNorm class:

class LayerNorm(nn.Module):
    def __init__(self, emb_dim):
        super().__init__()
        self.eps = 1e-5
        self.scale = nn.Parameter(torch.ones(emb_dim))
        self.shift = nn.Parameter(torch.zeros(emb_dim))

    def forward(self, x):
        mean = x.mean(dim=-1, keepdim=True)
        var = x.var(dim=-1, keepdim=True, unbiased=False)
        norm_x = (x - mean) / torch.sqrt(var + self.eps)
        return self.scale * norm_x + self.shift

Scale and shift

Note that in addition to performing the normalization by subtracting the mean and dividing by the square root of the variance (the standard deviation), we added two trainable parameters, a scale and a shift parameter

The initial scale (multiplying by 1) and shift (adding 0) values don’t have any effect; however, scale and shift are trainable parameters that the LLM automatically adjusts during training if it is determined that doing so would improve the model’s performance on its training task

This allows the model to learn appropriate scaling and shifting that best suit the data it is processing

Note that we also add a small value (eps) to the variance before computing its square root; this is to avoid division-by-zero errors if the variance is 0

Biased variance

In the variance calculation above, setting unbiased=False means using the formula \(\frac{\sum_i (x_i - \bar{x})^2}{n}\) to compute the variance, where n is the sample size (here, the number of features or columns); this formula does not include Bessel’s correction (which uses n-1 in the denominator), thus providing a biased estimate of the variance

For LLMs, where the embedding dimension n is very large, the difference between using n and n-1 is negligible

However, GPT-2 was trained with a biased variance in the normalization layers, which is why we also adopted this setting for compatibility reasons with the pretrained weights that we will load in later chapters
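As a rough illustration of why the choice between n and n-1 barely matters at this scale, the sketch below compares both estimators on a random vector of length 768. The random data and the use of Python’s statistics module are assumptions made for illustration; they are not part of the chapter’s code.

```python
import random
import statistics

random.seed(0)
emb_dim = 768  # embedding size of the 124M configuration
x = [random.gauss(0.0, 1.0) for _ in range(emb_dim)]

biased = statistics.pvariance(x)   # divides by n (like unbiased=False)
unbiased = statistics.variance(x)  # divides by n - 1 (Bessel's correction)

# The two estimates differ exactly by the factor (n - 1) / n.
ratio = biased / unbiased
print(ratio, (emb_dim - 1) / emb_dim)
```

For n = 768 the factor is 767/768, a relative difference of roughly 0.13%; for the 5-feature toy example used earlier in this section it would be 4/5, which is clearly visible.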

Let’s now try out LayerNorm in practice:

ln = LayerNorm(emb_dim=5)

out_ln = ln(batch_example)

mean = out_ln.mean(dim=-1, keepdim=True)

var = out_ln.var(dim=-1, unbiased=False, keepdim=True)

print("Mean:\n", mean)

print("Variance:\n", var)

Mean:

tensor([[ -0.0000],

[ 0.0000]], grad_fn=<MeanBackward1>)

Variance:

tensor([[1.0000],

[1.0000]], grad_fn=<VarBackward0>)

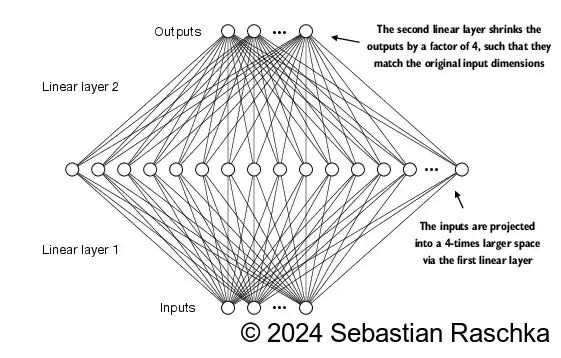

41.3. Implementing a feed forward network with GELU activations#

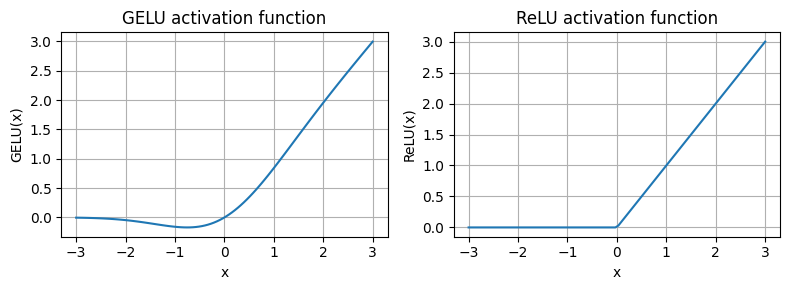

In this section, we implement a small neural network submodule that is used as part of the transformer block in LLMs We start with the activation function In deep learning, ReLU (Rectified Linear Unit) activation functions are commonly used due to their simplicity and effectiveness in various neural network architectures In LLMs, various other types of activation functions are used beyond the traditional ReLU; two notable examples are GELU (Gaussian Error Linear Unit) and SwiGLU (Sigmoid-Weighted Linear Unit) GELU and SwiGLU are more complex, smooth activation functions incorporating Gaussian and sigmoid-gated linear units, respectively, offering better performance for deep learning models, unlike the simpler, piecewise linear function of ReLU

GELU (Hendrycks and Gimpel 2016) can be implemented in several ways; the exact version is defined as GELU(x)=x⋅Φ(x), where Φ(x) is the cumulative distribution function of the standard Gaussian distribution. In practice, it’s common to implement a computationally cheaper approximation: \(\text{GELU}(x) \approx 0.5 \cdot x \cdot \left(1 + \tanh\left[\sqrt{\frac{2}{\pi}} \cdot \left(x + 0.044715 \cdot x^3\right)\right]\right) \) (the original GPT-2 model was also trained with this approximation)
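The following framework-free sketch compares the exact definition with the tanh approximation on a grid of inputs. It writes Φ(x) in terms of the error function, Φ(x) = 0.5 · (1 + erf(x / √2)); the helper names gelu_exact and gelu_tanh are chosen here for illustration only.

```python
import math

def gelu_exact(x):
    # x * Phi(x), with the standard normal CDF written via the error function
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def gelu_tanh(x):
    # tanh-based approximation from the formula above
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))

# Evaluate both versions on a grid from -5 to 5 in steps of 0.1.
xs = [i / 10 for i in range(-50, 51)]
max_abs_diff = max(abs(gelu_exact(x) - gelu_tanh(x)) for x in xs)
print(f"largest absolute difference on the grid: {max_abs_diff:.6f}")
```

On this grid the two versions stay very close to each other, which is why the cheaper approximation is a reasonable substitute.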

class GELU(nn.Module):
    def __init__(self):
        super().__init__()

    def forward(self, x):
        return 0.5 * x * (1 + torch.tanh(
            torch.sqrt(torch.tensor(2.0 / torch.pi)) *
            (x + 0.044715 * torch.pow(x, 3))
        ))

import matplotlib.pyplot as plt

gelu, relu = GELU(), nn.ReLU()

# Some sample data

x = torch.linspace(-3, 3, 100)

y_gelu, y_relu = gelu(x), relu(x)

plt.figure(figsize=(8, 3))

for i, (y, label) in enumerate(zip([y_gelu, y_relu], ["GELU", "ReLU"]), 1):
    plt.subplot(1, 2, i)
    plt.plot(x, y)
    plt.title(f"{label} activation function")
    plt.xlabel("x")
    plt.ylabel(f"{label}(x)")
    plt.grid(True)

plt.tight_layout()

plt.show()

As we can see, ReLU is a piecewise linear function that outputs the input directly if it is positive; otherwise, it outputs zero. GELU is a smooth, non-linear function that approximates ReLU but with a non-zero gradient for negative values.
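The difference in gradients can be checked numerically. The sketch below estimates the slope of both functions at x = -1 with a central finite difference; the helper central_diff and the choice of x = -1 are illustrative assumptions, not part of the chapter’s code.

```python
import math

def gelu(x):
    # tanh approximation of GELU, as used in this chapter
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))

def relu(x):
    return max(0.0, x)

def central_diff(f, x, h=1e-5):
    # Numerical estimate of the derivative f'(x)
    return (f(x + h) - f(x - h)) / (2.0 * h)

x0 = -1.0
gelu_slope = central_diff(gelu, x0)
relu_slope = central_diff(relu, x0)
print(f"GELU slope at {x0}: {gelu_slope:.4f}")
print(f"ReLU slope at {x0}: {relu_slope:.4f}")
```

ReLU is flat at this input, so a unit that lands there receives no gradient signal, whereas GELU still passes a small (here negative) gradient.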

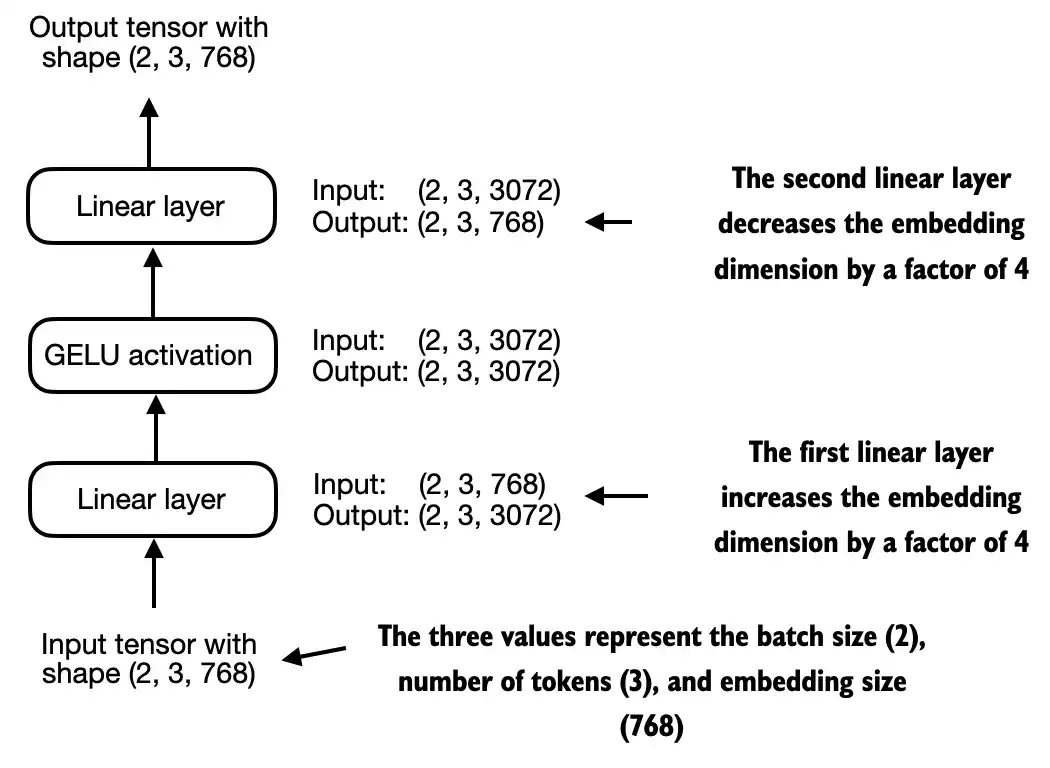

Next, let’s implement the small neural network module, FeedForward, that we will be using in the LLM’s transformer block later:

class FeedForward(nn.Module):
    def __init__(self, cfg):
        super().__init__()
        self.layers = nn.Sequential(
            nn.Linear(cfg["emb_dim"], 4 * cfg["emb_dim"]),
            GELU(),
            nn.Linear(4 * cfg["emb_dim"], cfg["emb_dim"]),
            nn.Dropout(cfg["drop_rate"])
        )

    def forward(self, x):
        return self.layers(x)

print(GPT_CONFIG_124M["emb_dim"])

768

ffn = FeedForward(GPT_CONFIG_124M)

# input shape: [batch_size, num_token, emb_size]

x = torch.rand(2, 3, 768)

out = ffn(x)

print(out.shape)

torch.Size([2, 3, 768])

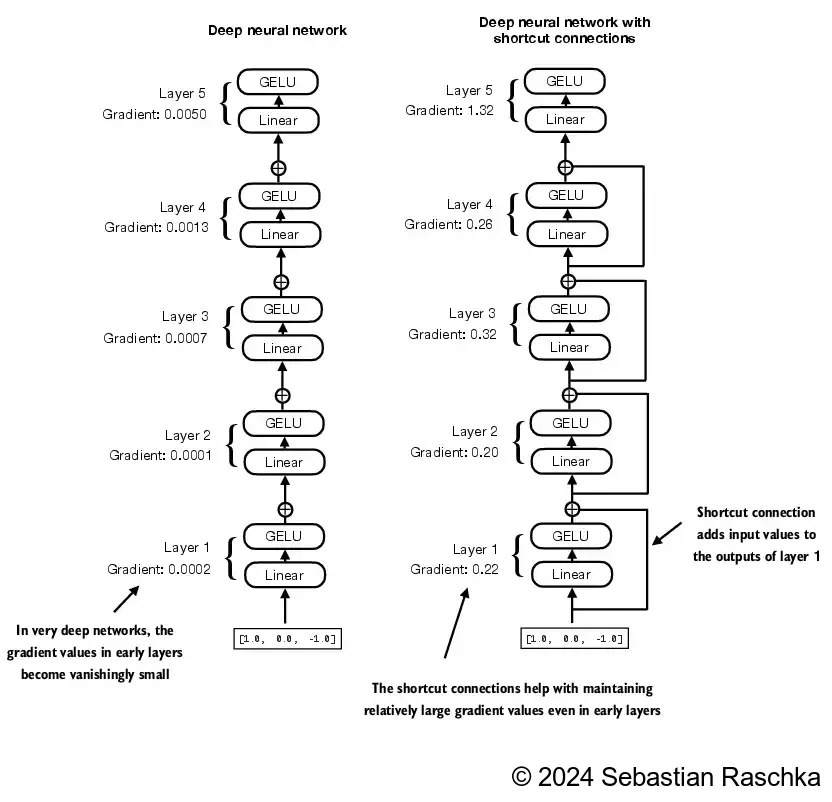

41.4. Adding shortcut connections

Next, let’s talk about the concept behind shortcut connections, also called skip or residual connections. Originally, shortcut connections were proposed in deep networks for computer vision (residual networks) to mitigate vanishing gradient problems. A shortcut connection creates an alternative shorter path for the gradient to flow through the network. This is achieved by adding the output of one layer to the output of a later layer, usually skipping one or more layers in between. Let’s illustrate this idea with a small example network:

In code, it looks like this:

class ExampleDeepNeuralNetwork(nn.Module):
    def __init__(self, layer_sizes, use_shortcut):
        super().__init__()
        self.use_shortcut = use_shortcut
        self.layers = nn.ModuleList([
            nn.Sequential(nn.Linear(layer_sizes[0], layer_sizes[1]), GELU()),
            nn.Sequential(nn.Linear(layer_sizes[1], layer_sizes[2]), GELU()),
            nn.Sequential(nn.Linear(layer_sizes[2], layer_sizes[3]), GELU()),
            nn.Sequential(nn.Linear(layer_sizes[3], layer_sizes[4]), GELU()),
            nn.Sequential(nn.Linear(layer_sizes[4], layer_sizes[5]), GELU())
        ])

    def forward(self, x):
        for layer in self.layers:
            # Compute the output of the current layer
            layer_output = layer(x)
            # Check if shortcut can be applied
            if self.use_shortcut and x.shape == layer_output.shape:
                x = x + layer_output
            else:
                x = layer_output
        return x


def print_gradients(model, x):
    # Forward pass
    output = model(x)
    target = torch.tensor([[0.]])

    # Calculate loss based on how close the target
    # and output are
    loss = nn.MSELoss()
    loss = loss(output, target)

    # Backward pass to calculate the gradients
    loss.backward()

    for name, param in model.named_parameters():
        if 'weight' in name:
            # Print the mean absolute gradient of the weights
            print(f"{name} has gradient mean of {param.grad.abs().mean().item()}")

Let’s print the gradient values first without shortcut connections:

layer_sizes = [3, 3, 3, 3, 3, 1]

sample_input = torch.tensor([[1., 0., -1.]])

torch.manual_seed(123)

model_without_shortcut = ExampleDeepNeuralNetwork(

layer_sizes, use_shortcut=False

)

print_gradients(model_without_shortcut, sample_input)

layers.0.0.weight has gradient mean of 0.00020173587836325169

layers.1.0.weight has gradient mean of 0.0001201116101583466

layers.2.0.weight has gradient mean of 0.0007152041653171182

layers.3.0.weight has gradient mean of 0.001398873864673078

layers.4.0.weight has gradient mean of 0.005049646366387606

Next, let’s print the gradient values with shortcut connections:

torch.manual_seed(123)

model_with_shortcut = ExampleDeepNeuralNetwork(

layer_sizes, use_shortcut=True

)

print_gradients(model_with_shortcut, sample_input)

layers.0.0.weight has gradient mean of 0.22169792652130127

layers.1.0.weight has gradient mean of 0.20694105327129364

layers.2.0.weight has gradient mean of 0.32896995544433594

layers.3.0.weight has gradient mean of 0.2665732502937317

layers.4.0.weight has gradient mean of 1.3258541822433472

As we can see based on the output above, shortcut connections prevent the gradients from vanishing in the early layers (towards layers.0)

We will use this concept of a shortcut connection next when we implement a transformer block
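A back-of-the-envelope way to see why shortcuts help: without a shortcut, a layer computes y = f(x), so the gradient flowing back through it is scaled by f'(x); with a shortcut, y = x + f(x), so the scale factor becomes 1 + f'(x). The sketch below uses a hypothetical chain of five scalar layers that all share the same small local derivative; this is a simplification for illustration, not a model of the network above.

```python
# Hypothetical chain of scalar "layers", each with the same local derivative f'(x).
local_derivative = 0.1
num_layers = 5

# Plain stack: y = f(x), so the end-to-end derivative is a product of local derivatives.
grad_plain = local_derivative ** num_layers

# With shortcuts: y = x + f(x), so each layer contributes a factor (1 + f'(x)).
grad_shortcut = (1.0 + local_derivative) ** num_layers

print(f"without shortcuts: {grad_plain:.6f}")
print(f"with shortcuts:    {grad_shortcut:.6f}")
```

The product of small factors shrinks quickly with depth, while the factors of the form 1 + f'(x) keep the gradient on the order of 1, which mirrors the trend in the printed gradient means.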

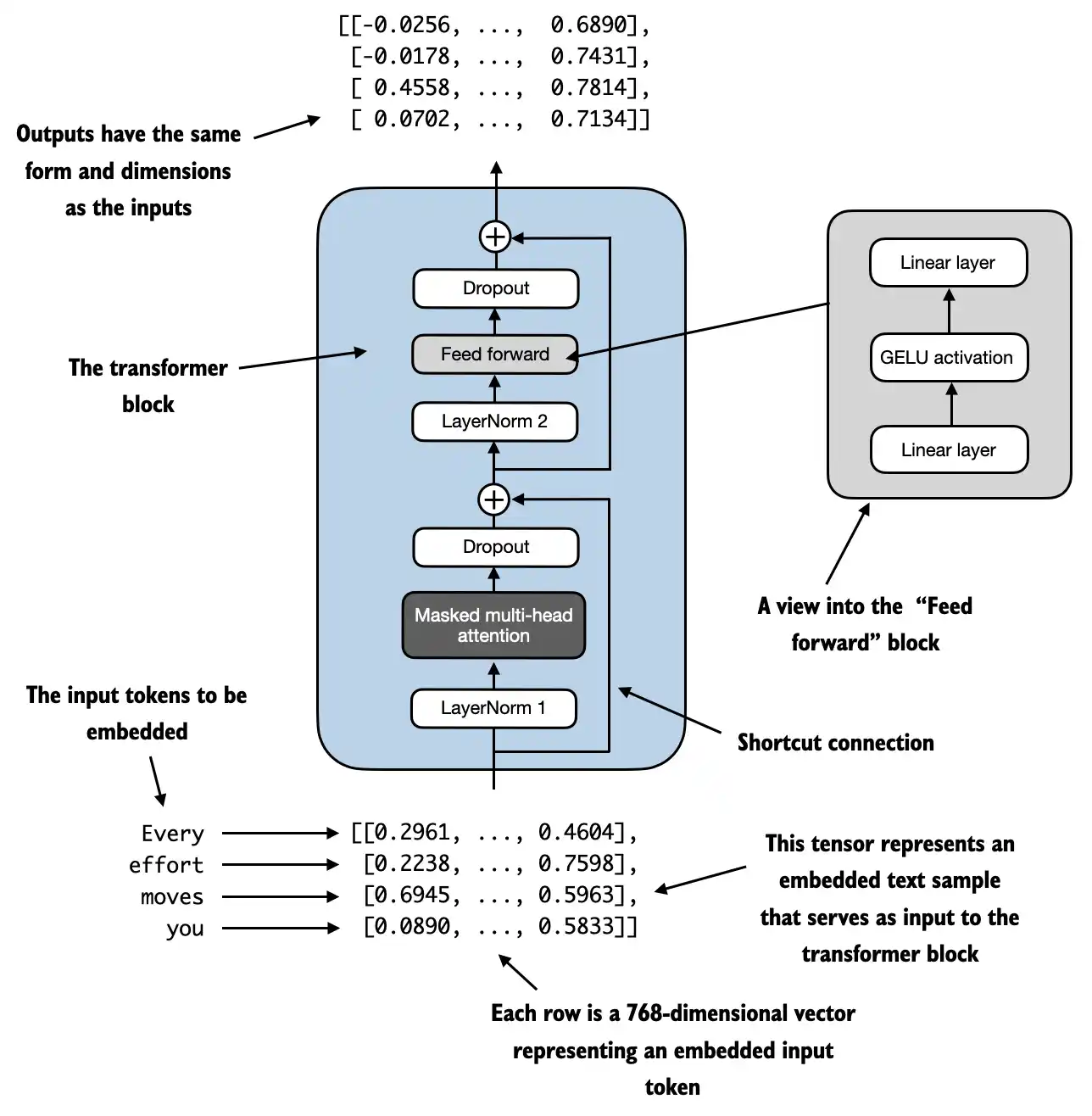

41.5. Connecting attention and linear layers in a transformer block

In this section, we now combine the previous concepts into a so-called transformer block. A transformer block combines the causal multi-head attention module with the linear layers, the feed forward neural network. In addition, the transformer block also uses dropout and shortcut connections.

# Some necessary functions

import tiktoken

import torch

import torch.nn as nn

from torch.utils.data import Dataset, DataLoader

class GPTDatasetV1(Dataset):
    def __init__(self, txt, tokenizer, max_length, stride):
        self.tokenizer = tokenizer
        self.input_ids = []
        self.target_ids = []

        # Tokenize the entire text
        token_ids = tokenizer.encode(txt)

        # Use a sliding window to chunk the book into overlapping sequences of max_length
        for i in range(0, len(token_ids) - max_length, stride):
            input_chunk = token_ids[i:i + max_length]
            target_chunk = token_ids[i + 1: i + max_length + 1]
            self.input_ids.append(torch.tensor(input_chunk))
            self.target_ids.append(torch.tensor(target_chunk))

    def __len__(self):
        return len(self.input_ids)

    def __getitem__(self, idx):
        return self.input_ids[idx], self.target_ids[idx]


def create_dataloader_v1(txt, batch_size=4, max_length=256,
                         stride=128, shuffle=True, drop_last=True):
    # Initialize the tokenizer
    tokenizer = tiktoken.get_encoding("gpt2")

    # Create dataset
    dataset = GPTDatasetV1(txt, tokenizer, max_length, stride)

    # Create dataloader
    dataloader = DataLoader(
        dataset, batch_size=batch_size, shuffle=shuffle, drop_last=drop_last)

    return dataloader

class MultiHeadAttention(nn.Module):
    def __init__(self, d_in, d_out, block_size, dropout, num_heads, qkv_bias=False):
        super().__init__()
        assert d_out % num_heads == 0, "d_out must be divisible by num_heads"

        self.d_out = d_out
        self.num_heads = num_heads
        self.head_dim = d_out // num_heads  # Reduce the projection dim to match desired output dim

        self.W_query = nn.Linear(d_in, d_out, bias=qkv_bias)
        self.W_key = nn.Linear(d_in, d_out, bias=qkv_bias)
        self.W_value = nn.Linear(d_in, d_out, bias=qkv_bias)
        self.out_proj = nn.Linear(d_out, d_out)  # Linear layer to combine head outputs
        self.dropout = nn.Dropout(dropout)
        self.register_buffer('mask', torch.triu(torch.ones(block_size, block_size), diagonal=1))

    def forward(self, x):
        b, num_tokens, d_in = x.shape

        keys = self.W_key(x)  # Shape: (b, num_tokens, d_out)
        queries = self.W_query(x)
        values = self.W_value(x)

        # We implicitly split the matrix by adding a `num_heads` dimension
        # Unroll last dim: (b, num_tokens, d_out) -> (b, num_tokens, num_heads, head_dim)
        keys = keys.view(b, num_tokens, self.num_heads, self.head_dim)
        values = values.view(b, num_tokens, self.num_heads, self.head_dim)
        queries = queries.view(b, num_tokens, self.num_heads, self.head_dim)

        # Transpose: (b, num_tokens, num_heads, head_dim) -> (b, num_heads, num_tokens, head_dim)
        keys = keys.transpose(1, 2)
        queries = queries.transpose(1, 2)
        values = values.transpose(1, 2)

        # Compute scaled dot-product attention (aka self-attention) with a causal mask
        attn_scores = queries @ keys.transpose(2, 3)  # Dot product for each head

        # Original mask truncated to the number of tokens and converted to boolean
        mask_bool = self.mask.bool()[:num_tokens, :num_tokens]

        # Use the mask to fill attention scores
        attn_scores.masked_fill_(mask_bool, -torch.inf)

        attn_weights = torch.softmax(attn_scores / keys.shape[-1]**0.5, dim=-1)
        attn_weights = self.dropout(attn_weights)

        # Shape: (b, num_tokens, num_heads, head_dim)
        context_vec = (attn_weights @ values).transpose(1, 2)

        # Combine heads, where self.d_out = self.num_heads * self.head_dim
        context_vec = context_vec.contiguous().view(b, num_tokens, self.d_out)
        context_vec = self.out_proj(context_vec)  # optional projection

        return context_vec

class TransformerBlock(nn.Module):
    def __init__(self, cfg):
        super().__init__()
        self.att = MultiHeadAttention(
            d_in=cfg["emb_dim"],
            d_out=cfg["emb_dim"],
            block_size=cfg["ctx_len"],
            num_heads=cfg["n_heads"],
            dropout=cfg["drop_rate"],
            qkv_bias=cfg["qkv_bias"])
        self.ff = FeedForward(cfg)
        self.norm1 = LayerNorm(cfg["emb_dim"])
        self.norm2 = LayerNorm(cfg["emb_dim"])
        self.drop_resid = nn.Dropout(cfg["drop_rate"])

    def forward(self, x):
        # Shortcut connection for attention block
        shortcut = x
        x = self.norm1(x)
        x = self.att(x)  # Shape [batch_size, num_tokens, emb_size]
        x = self.drop_resid(x)
        x = x + shortcut  # Add the original input back

        # Shortcut connection for feed forward block
        shortcut = x
        x = self.norm2(x)
        x = self.ff(x)
        x = self.drop_resid(x)
        x = x + shortcut  # Add the original input back

        return x

Suppose we have 2 input samples with 4 tokens each, where each token is a 768-dimensional embedding vector; then this transformer block applies self-attention, followed by linear layers, to produce an output of the same size. You can think of the output as an augmented version of the context vectors we discussed in the previous chapter.

torch.manual_seed(123)

x = torch.rand(2, 4, 768) # Shape: [batch_size, num_tokens, emb_dim]

block = TransformerBlock(GPT_CONFIG_124M)

output = block(x)

print("Input shape:", x.shape)

print("Output shape:", output.shape)

Input shape: torch.Size([2, 4, 768])

Output shape: torch.Size([2, 4, 768])
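The causal part of the attention module comes from the masking step in MultiHeadAttention: scores for future positions are set to negative infinity before the softmax, so their attention weights become exactly zero. The framework-free sketch below reproduces that step for a single head; the 3×3 score matrix is made up for illustration, and the function name causal_attention_weights is not part of the chapter’s code.

```python
import math

def causal_attention_weights(scores):
    """Row-wise softmax over scores, with future positions (column > row) masked out."""
    weights = []
    for i, row in enumerate(scores):
        # Token i may only attend to positions 0..i.
        masked = [s if j <= i else float("-inf") for j, s in enumerate(row)]
        row_max = max(masked)  # finite, because position i itself is never masked
        exps = [math.exp(s - row_max) for s in masked]  # exp(-inf) evaluates to 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights

# Hypothetical raw attention scores for 3 tokens (assumed already scaled by sqrt(head_dim)).
scores = [
    [0.5, 1.0, -0.3],
    [0.2, 0.1, 0.9],
    [1.2, -0.4, 0.3],
]
weights = causal_attention_weights(scores)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row still sums to 1, but everything above the diagonal is zero, so a token’s context vector never depends on tokens that come after it.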

41.6. Coding the GPT model#

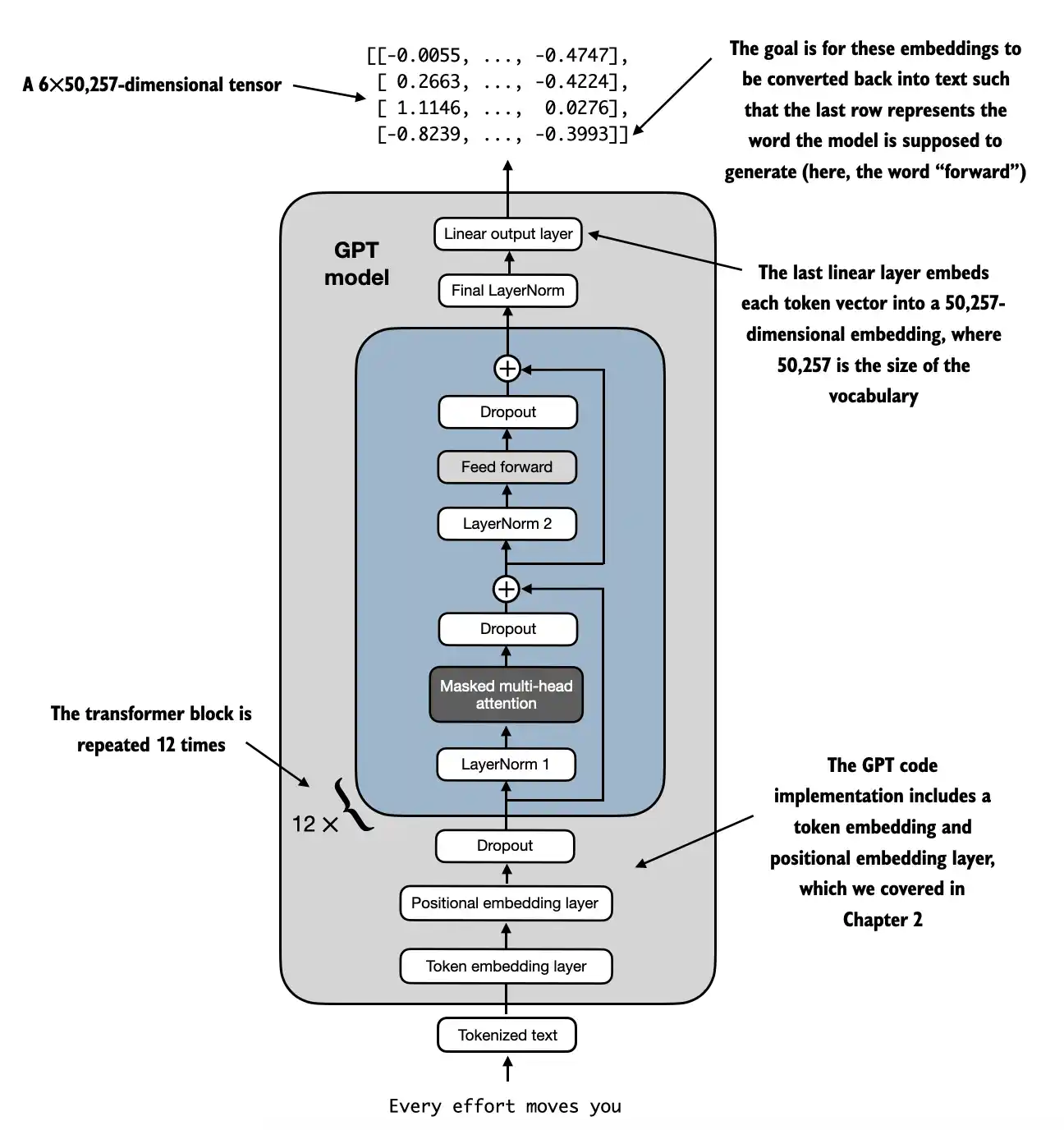

We are almost there: now let’s plug in the transformer block into the architecture we coded at the very beginning of this chapter so that we obtain a useable GPT architecture Note that the transformer block is repeated multiple times; in the case of the smallest 124M GPT-2 model, we repeat it 12 times:

The corresponding code implementation, where cfg["n_layers"] = 12:

class GPTModel(nn.Module):
    def __init__(self, cfg):
        super().__init__()
        self.tok_emb = nn.Embedding(cfg["vocab_size"], cfg["emb_dim"])
        self.pos_emb = nn.Embedding(cfg["ctx_len"], cfg["emb_dim"])
        self.drop_emb = nn.Dropout(cfg["drop_rate"])

        self.trf_blocks = nn.Sequential(
            *[TransformerBlock(cfg) for _ in range(cfg["n_layers"])])

        self.final_norm = LayerNorm(cfg["emb_dim"])
        self.out_head = nn.Linear(
            cfg["emb_dim"], cfg["vocab_size"], bias=False
        )

    def forward(self, in_idx):
        batch_size, seq_len = in_idx.shape
        tok_embeds = self.tok_emb(in_idx)
        pos_embeds = self.pos_emb(torch.arange(seq_len, device=in_idx.device))
        x = tok_embeds + pos_embeds  # Shape [batch_size, num_tokens, emb_size]
        x = self.drop_emb(x)
        x = self.trf_blocks(x)
        x = self.final_norm(x)
        logits = self.out_head(x)
        return logits

Using the configuration of the 124M parameter model, we can now instantiate this GPT model with random initial weights as follows:

torch.manual_seed(123)

model = GPTModel(GPT_CONFIG_124M)

out = model(batch)

print("Input batch:\n", batch)

print("\nOutput shape:", out.shape)

print(out)

Input batch:

tensor([[6109, 3626, 6100, 345],

[6109, 1110, 6622, 257]])

Output shape: torch.Size([2, 4, 50257])

tensor([[[ 0.6525, 0.5753, 0.0174, ..., 0.2988, 0.1441, 0.0032],

[ 0.0839, -0.6789, -0.6605, ..., -0.2912, 0.4267, -0.2696],

[ 0.8440, 0.1894, 0.0708, ..., 0.0982, -0.2183, 0.0920],

[-0.7958, 0.5066, 0.0209, ..., 0.7497, 0.3233, -0.1251]],

[[ 0.0181, 0.2606, -0.3022, ..., 0.2940, 0.1998, -0.6246],

[ 0.0596, 0.3041, -0.0293, ..., 0.6796, -0.1226, 0.1303],

[ 1.1895, 1.0891, 0.0237, ..., 0.8299, 0.1794, -0.2250],

[ 0.5457, 0.1861, 0.3872, ..., 1.3537, -0.4062, -0.0268]]],

grad_fn=<UnsafeViewBackward0>)

We will train this model in the next chapter. However, a quick note about its size: we previously referred to it as a 124M parameter model; we can double-check this number as follows:

total_params = sum(p.numel() for p in model.parameters())

print(f"Total number of parameters: {total_params:,}")

Total number of parameters: 163,009,536

As we see above, this model has 163M, not 124M parameters; why?

In the original GPT-2 paper, the researchers applied weight tying, which means that they reused the token embedding layer (tok_emb) as the output layer, which means setting self.out_head.weight = self.tok_emb.weight

The token embedding layer projects the 50,257-dimensional one-hot encoded input tokens to a 768-dimensional embedding representation

The output layer projects 768-dimensional embeddings back into a 50,257-dimensional representation so that we can convert these back into words (more about that in the next section)

So, the embedding and output layer have the same number of weight parameters, as we can see based on the shape of their weight matrices:

print("Token embedding layer shape:", model.tok_emb.weight.shape)

print("Output layer shape:", model.out_head.weight.shape)

Token embedding layer shape: torch.Size([50257, 768])

Output layer shape: torch.Size([50257, 768])

In the original GPT-2 paper, the researchers reused the token embedding matrix as an output matrix. Correspondingly, if we subtracted the number of parameters of the output layer, we’d get a 124M parameter model:

total_params_gpt2 = total_params - sum(p.numel() for p in model.out_head.parameters())

print(f"Number of trainable parameters considering weight tying: {total_params_gpt2:,}")

Number of trainable parameters considering weight tying: 124,412,160
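To see where these totals come from, the parameter count can also be derived by hand from the layer shapes defined in this chapter. The sketch below does this arithmetic in plain Python, assuming the GPT_CONFIG_124M values and the bias settings used in the classes above (no bias on the query, key, value, and output-head layers; bias on out_proj and the feed forward layers).

```python
cfg = {"vocab_size": 50257, "ctx_len": 1024, "emb_dim": 768, "n_layers": 12}
d = cfg["emb_dim"]

tok_emb = cfg["vocab_size"] * d          # token embedding table
pos_emb = cfg["ctx_len"] * d             # positional embedding table

attn = 3 * d * d                         # W_query, W_key, W_value (qkv_bias=False)
attn += d * d + d                        # out_proj weight and bias
ff = (d * 4 * d + 4 * d) + (4 * d * d + d)   # two Linear layers, each with bias
norms = 2 * (2 * d)                      # norm1 and norm2, each with scale and shift
block = attn + ff + norms

final_norm = 2 * d                       # scale and shift
out_head = d * cfg["vocab_size"]         # output projection, no bias

total = tok_emb + pos_emb + cfg["n_layers"] * block + final_norm + out_head
print(f"Per transformer block: {block:,}")
print(f"Total: {total:,}")                               # 163,009,536
print(f"Total with weight tying: {total - out_head:,}")  # 124,412,160
```

The causal mask is registered as a buffer rather than a parameter, so it does not appear in the count.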

In practice, I found it easier to train the model without weight-tying, which is why we didn’t implement it here. However, we will revisit and apply this weight-tying idea later when we load the pretrained weights in Chapter 6. Lastly, we can compute the memory requirements of the model as follows, which can be a helpful reference point:

# Calculate the total size in bytes (assuming float32, 4 bytes per parameter)

total_size_bytes = total_params * 4

# Convert to megabytes

total_size_mb = total_size_bytes / (1024 * 1024)

print(f"Total size of the model: {total_size_mb:.2f} MB")

Total size of the model: 621.83 MB
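The same arithmetic extends to other numeric formats. As a small sketch, the snippet below repeats the calculation for 4-byte and 2-byte parameters; the 2-byte case is an assumption added for comparison and is not used elsewhere in this chapter. The estimate covers the weights only, not activations, gradients, or optimizer state.

```python
total_params = 163_009_536  # parameter count printed earlier in this section

bytes_per_param = {"float32": 4, "float16": 2}
size_mb = {
    dtype: total_params * nbytes / (1024 * 1024)
    for dtype, nbytes in bytes_per_param.items()
}

for dtype, mb in size_mb.items():
    print(f"{dtype}: {mb:.2f} MB")
```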

Exercise: you can try the following other configurations, which are referenced in the GPT-2 paper, as well.

**GPT2-small** (the 124M configuration we already implemented):

"emb_dim" = 768

"n_layers" = 12

"n_heads" = 12

**GPT2-medium:**

"emb_dim" = 1024

"n_layers" = 24

"n_heads" = 16

**GPT2-large:**

"emb_dim" = 1280

"n_layers" = 36

"n_heads" = 20

**GPT2-XL:**

"emb_dim" = 1600

"n_layers" = 48

"n_heads" = 25

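One possible way to set up the exercise is to derive each configuration from the base dictionary and override only the three values that change. The dictionary names below (base, overrides, configs) are illustrative choices; the numbers are the ones listed above.

```python
base = {
    "vocab_size": 50257, "ctx_len": 1024, "emb_dim": 768,
    "n_heads": 12, "n_layers": 12, "drop_rate": 0.1, "qkv_bias": False,
}

overrides = {
    "GPT2-small": {"emb_dim": 768, "n_layers": 12, "n_heads": 12},
    "GPT2-medium": {"emb_dim": 1024, "n_layers": 24, "n_heads": 16},
    "GPT2-large": {"emb_dim": 1280, "n_layers": 36, "n_heads": 20},
    "GPT2-XL": {"emb_dim": 1600, "n_layers": 48, "n_heads": 25},
}

# Copy the base config and apply the per-model overrides.
configs = {name: {**base, **ov} for name, ov in overrides.items()}

head_dims = {}
for name, c in configs.items():
    # MultiHeadAttention requires emb_dim to be divisible by n_heads.
    assert c["emb_dim"] % c["n_heads"] == 0, name
    head_dims[name] = c["emb_dim"] // c["n_heads"]
    print(f"{name}: head_dim = {head_dims[name]}")
```

Each resulting dictionary can be passed to GPTModel in place of GPT_CONFIG_124M. Note that all four configurations end up with the same per-head dimension of 64; the larger models add heads and layers rather than widening each head.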
41.7. Generating text

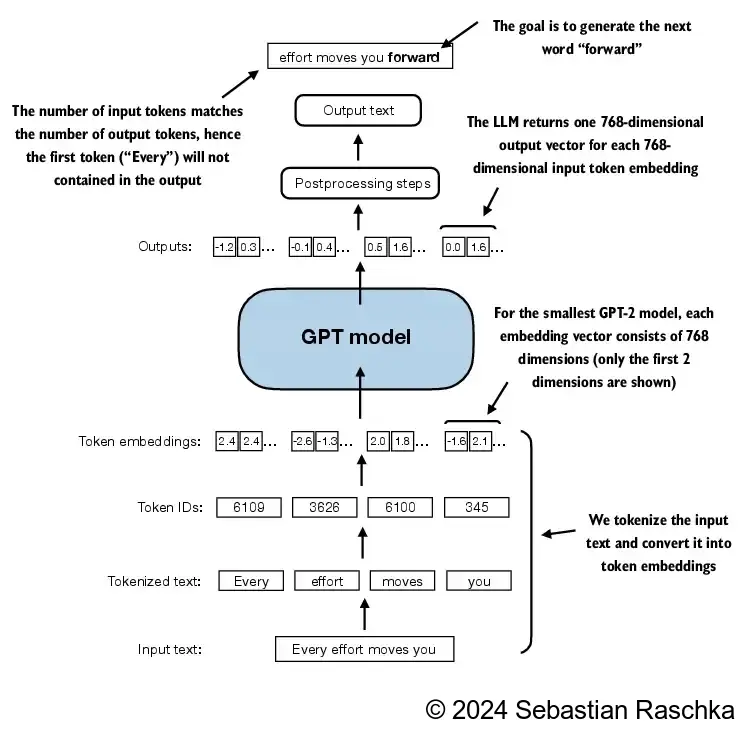

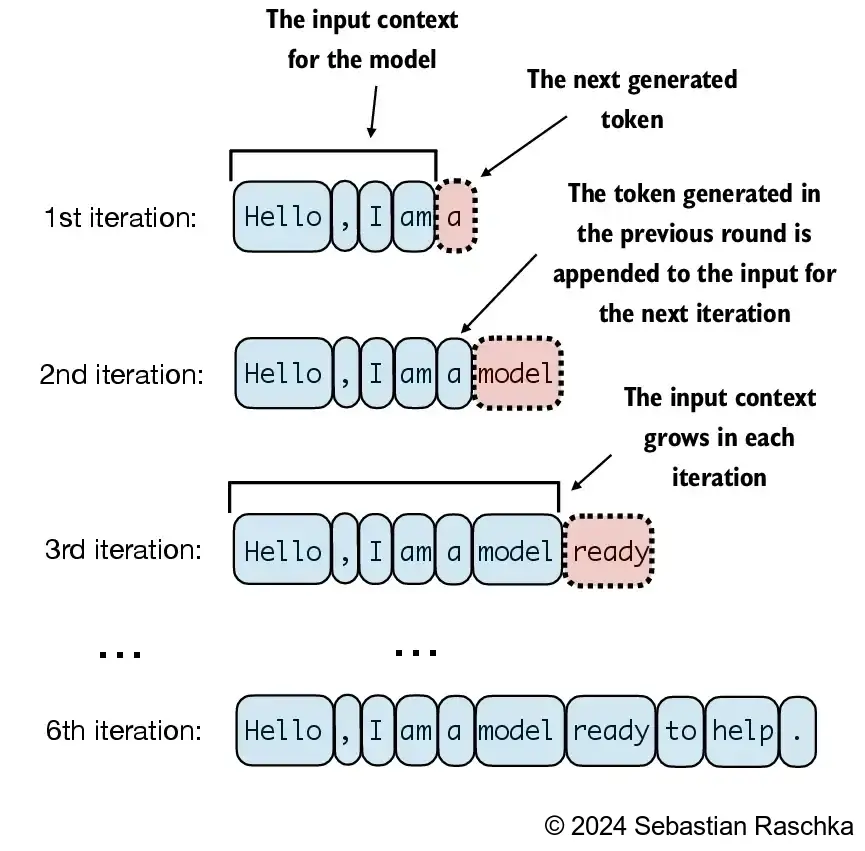

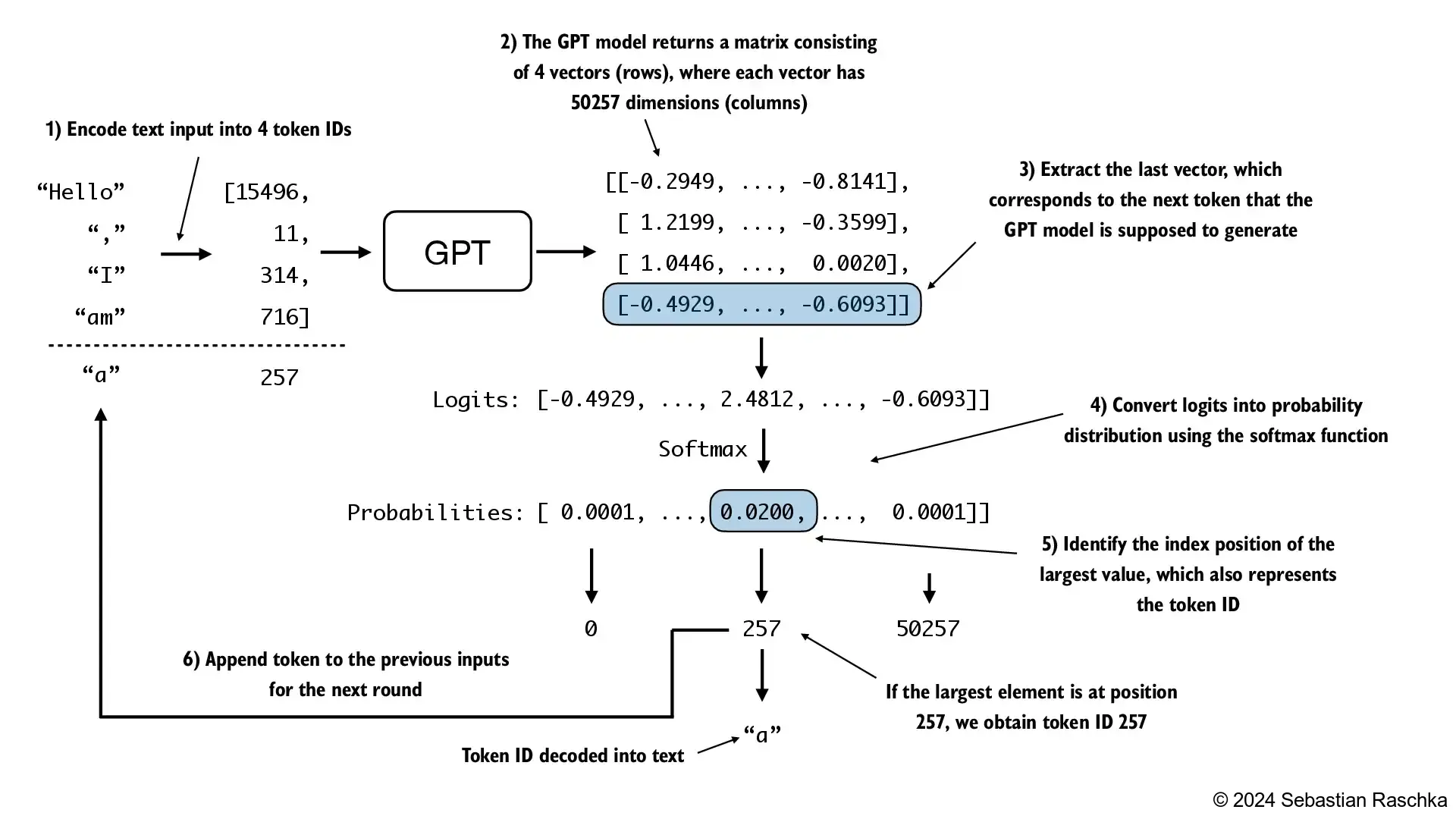

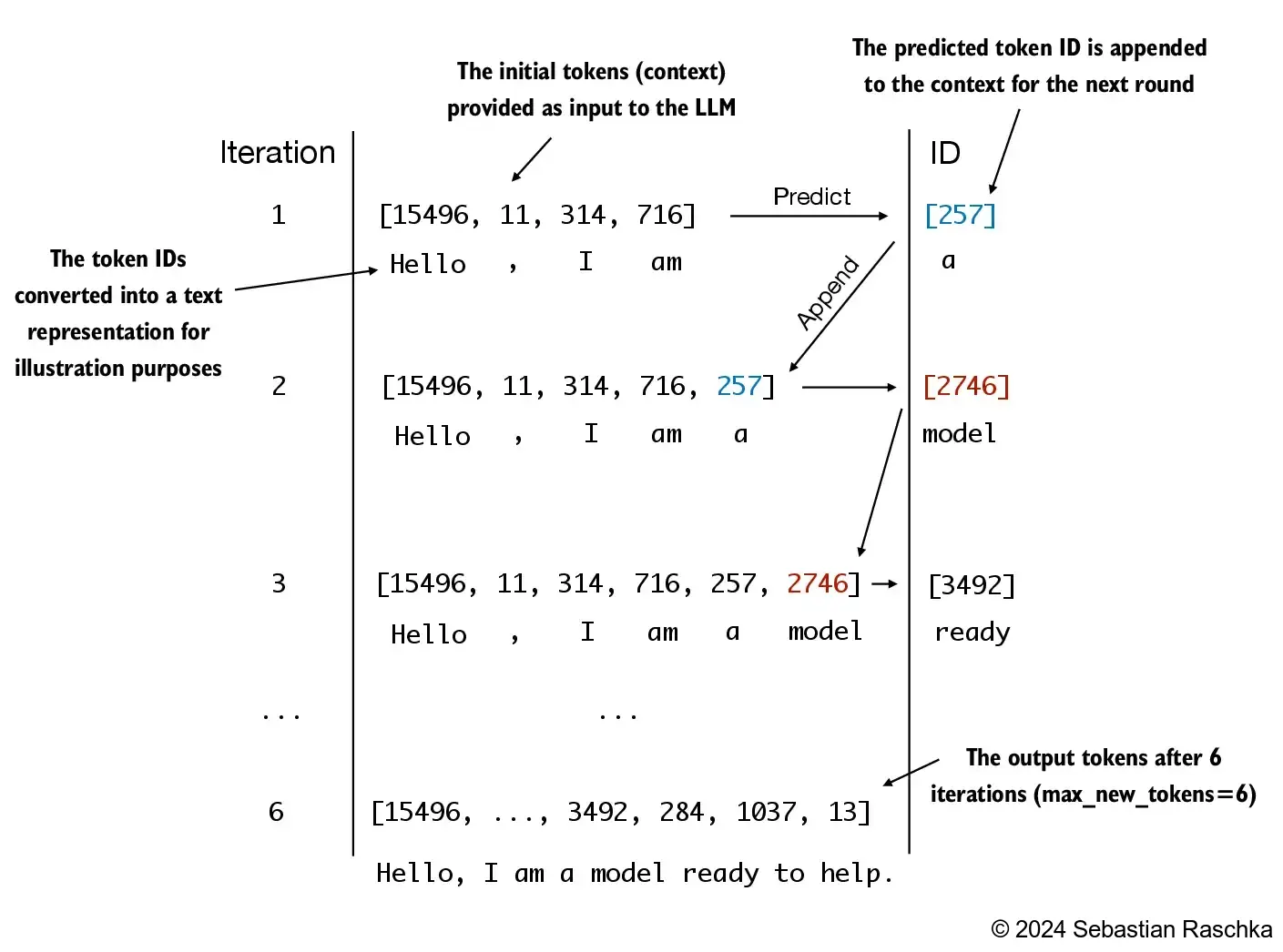

LLMs like the GPT model we implemented above are used to generate one word at a time

The following generate_text_simple function implements greedy decoding, which is a simple and fast method to generate text

In greedy decoding, at each step, the model chooses the word (or token) with the highest probability as its next output (the highest logit corresponds to the highest probability, so we technically wouldn’t even have to compute the softmax function explicitly)

In the next chapter, we will implement a more advanced generate_text function

The figure below depicts how the GPT model, given an input context, generates the next word token

def generate_text_simple(model, idx, max_new_tokens, context_size):
    # idx is (batch, n_tokens) array of indices in the current context
    for _ in range(max_new_tokens):

        # Crop current context if it exceeds the supported context size
        # E.g., if LLM supports only 5 tokens, and the context size is 10
        # then only the last 5 tokens are used as context
        idx_cond = idx[:, -context_size:]

        # Get the predictions
        with torch.no_grad():
            logits = model(idx_cond)

        # Focus only on the last time step
        # (batch, n_tokens, vocab_size) becomes (batch, vocab_size)
        logits = logits[:, -1, :]

        # Apply softmax to get probabilities
        probas = torch.softmax(logits, dim=-1)  # (batch, vocab_size)

        # Get the idx of the vocab entry with the highest probability value
        idx_next = torch.argmax(probas, dim=-1, keepdim=True)  # (batch, 1)

        # Append sampled index to the running sequence
        idx = torch.cat((idx, idx_next), dim=1)  # (batch, n_tokens+1)

    return idx

The generate_text_simple above implements an iterative process, where it creates one token at a time
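The remark that the softmax is technically optional for greedy decoding can be checked with a small framework-free sketch: softmax is strictly increasing in each logit, so the position of the largest logit is also the position of the largest probability. The four-entry vocabulary below is hypothetical, and the helpers softmax and argmax are written here for illustration.

```python
import math

def softmax(logits):
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def argmax(values):
    return max(range(len(values)), key=lambda i: values[i])

# Hypothetical logits for a 4-token vocabulary.
logits = [-1.4929, 4.4812, -1.6093, 0.25]
probas = softmax(logits)

next_from_logits = argmax(logits)
next_from_probas = argmax(probas)
print(next_from_logits, next_from_probas)
print([round(p, 4) for p in probas])
```

Both calls select the same token index, so the explicit softmax in generate_text_simple mainly serves readability; it becomes necessary once tokens are sampled from the probabilities instead of picked greedily.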

Let’s prepare an input example:

b = logits[0, -1, :]

b[0] = -1.4929

b[1] = 4.4812

b[2] = -1.6093

print(b[:3])

torch.softmax(b, dim=0)

tensor([-1.4929, 4.4812, -1.6093], grad_fn=<SliceBackward0>)

tensor([ 0.0000, 0.0012, 0.0000, ..., 0.0000, 0.0000,

0.0000], grad_fn=<SoftmaxBackward0>)

start_context = "Hello, I am"

encoded = tokenizer.encode(start_context)

print("encoded:", encoded)

encoded_tensor = torch.tensor(encoded).unsqueeze(0)

print("encoded_tensor.shape:", encoded_tensor.shape)

encoded: [15496, 11, 314, 716]

encoded_tensor.shape: torch.Size([1, 4])

model.eval() # disable dropout

out = generate_text_simple(

model=model,

idx=encoded_tensor,

max_new_tokens=6,

context_size=GPT_CONFIG_124M["ctx_len"]

)

print("Output:", out)

print("Output length:", len(out[0]))

Output: tensor([[15496, 11, 314, 716, 27018, 24086, 47843, 30961, 42348, 7267]])

Output length: 10

Remove batch dimension and convert back into text:

decoded_text = tokenizer.decode(out.squeeze(0).tolist())

print(decoded_text)

Hello, I am Featureiman Byeswickattribute argue

41.8. Your turn! 🚀

tbd.

41.9. Acknowledgments

Thanks to Sebastian Raschka for creating the open-source course Build a Large Language Model (From Scratch). It inspires the majority of the content in this chapter.